The emergence of artificial intelligence (AI) content-generation models, such as the Chat Generative Pre-trained Transformer (ChatGPT), not only signals a new phase in content creation but also indicates that AI-driven creation is becoming a focal point of cultural and technological innovation. AI content-generation models are being applied increasingly widely around the world (Wei, 2024), offering unprecedented possibilities for creation. They enable creators to delegate parts of the creative process to AI systems through cognitive outsourcing, whereby cognitive tasks, such as information processing and decision making, are delegated to external systems or tools (e.g., AI) to enhance efficiency and save resources (Ahlstrom-Vij, 2016). From the perspective of cognitive psychology, cognitive outsourcing supports higher level thinking processes, such as creative and critical thinking, by alleviating the cognitive load on the human brain. Through cognitive outsourcing, creators can move beyond the limits of traditional thinking, leveraging the capabilities of AI to expand their creative thinking and elevate artistic creation.

Cognitive outsourcing is increasingly important for the digital generation, primarily because it helps people adapt to rapid changes in the information environment and manage innovation and intellectual property effectively. As Melnyk (2023) detailed, this practice is characterized by clip thinking, in which information is processed in fragmented snippets, leading to fast but superficial engagement with content. Although this can improve information-processing efficiency and reduce costs, allowing organizations to focus on core competencies (Kakabadse & Kakabadse, 2005), it also has significant drawbacks. Overreliance on external cognitive resources can diminish critical cognitive abilities, such as attention span and critical thinking (Melnyk, 2023), and can drain knowledge and competence, hindering long-term innovation (Edvardsson & Durst, 2020). Cognitive outsourcing therefore requires careful management to mitigate its potential negative impacts on cognitive development and organizational knowledge.

In research on large language model tools such as ChatGPT, the notion of cognitive outsourcing has become particularly significant. AI is now moving beyond academic laboratories into practical applications, including smart recommendations, speech recognition, and image processing (Chiu et al., 2024). As a strategy, cognitive outsourcing enables creators to focus on ideation and the nuanced adjustment of artistic expression while entrusting repetitive or technical tasks, such as data processing and content generation, to AI.

However, to our knowledge, no research has yet quantitatively and systematically measured cognitive outsourcing behavior. The widespread use of large language model tools has revolutionized traditional concepts of human–computer interaction and facilitated a paradigm shift in information dissemination (Huang et al., 2024). In this collaborative model, AI acts not only as an executor but also as a participant, and the content it generates in turn influences and inspires creators’ ideas. This interactive process requires creators not only to possess artistic intuition and technical knowledge but also to understand how AI works and what it can do, so that they can better control the creative process and its outcome.

By exploring the concept and scope of cognitive outsourcing, we can gain a deeper understanding of how AI technology extends human creativity and how this extension influences the essence and value of artistic creation. Exploring the concept is therefore both necessary and significant. The academic community needs to clarify what the concept comprises and what its dimensions are, and to construct a scientific measure of these elements. In this study we developed a scale to measure the extent to which, and the ways in which, creators outsource cognitive tasks to AI technologies. Our aim was to identify how users perceive the credibility and reliability of AI tools, such as large language models like ChatGPT, and how these perceptions affect their decisions to delegate creative processes to these tools.

Distributed Cognition Theory

The theoretical foundation of cognitive outsourcing is grounded in the concept of distributed cognition (Liu et al., 2008). Distributed cognition theory provides a comprehensive and dynamic perspective for cognitive science, emphasizing the social and situational nature of cognitive processes. This perspective supports the concept of cognitive outsourcing by highlighting that cognitive activities are not confined within an individual’s brain but span the boundaries between individuals and their environment (Hutchins & Klausen, 1996). Rogers (1997) noted the constraints of human short-term memory, which can hold only a limited amount of information at one time. Cognitive chunking, the process by which individuals group pieces of information into larger units or chunks, helps to overcome this limitation by reducing the number of items that must be held in short-term memory at any one time (Fellbaum, 2013). By extension, chunking implies packaging cognition and other social affairs into set procedures, which also forms a theoretical basis for cognitive outsourcing.

Ahlstrom-Vij (2016) discussed human reliance on external sources of information and raised questions about the epistemological implications of cognitive outsourcing, examining whether it undermines individual cognitive autonomy and whether this constitutes a genuine cognitive problem. In the context of AI-driven creation, human cognitive abilities are not confined to internal brain processes but can be extended and enhanced through external tools and technologies, which are regarded as integral components of the cognitive process, capable of transforming people’s ways of thinking and cognitive capacities.

The Psychological Process of Cognitive Outsourcing

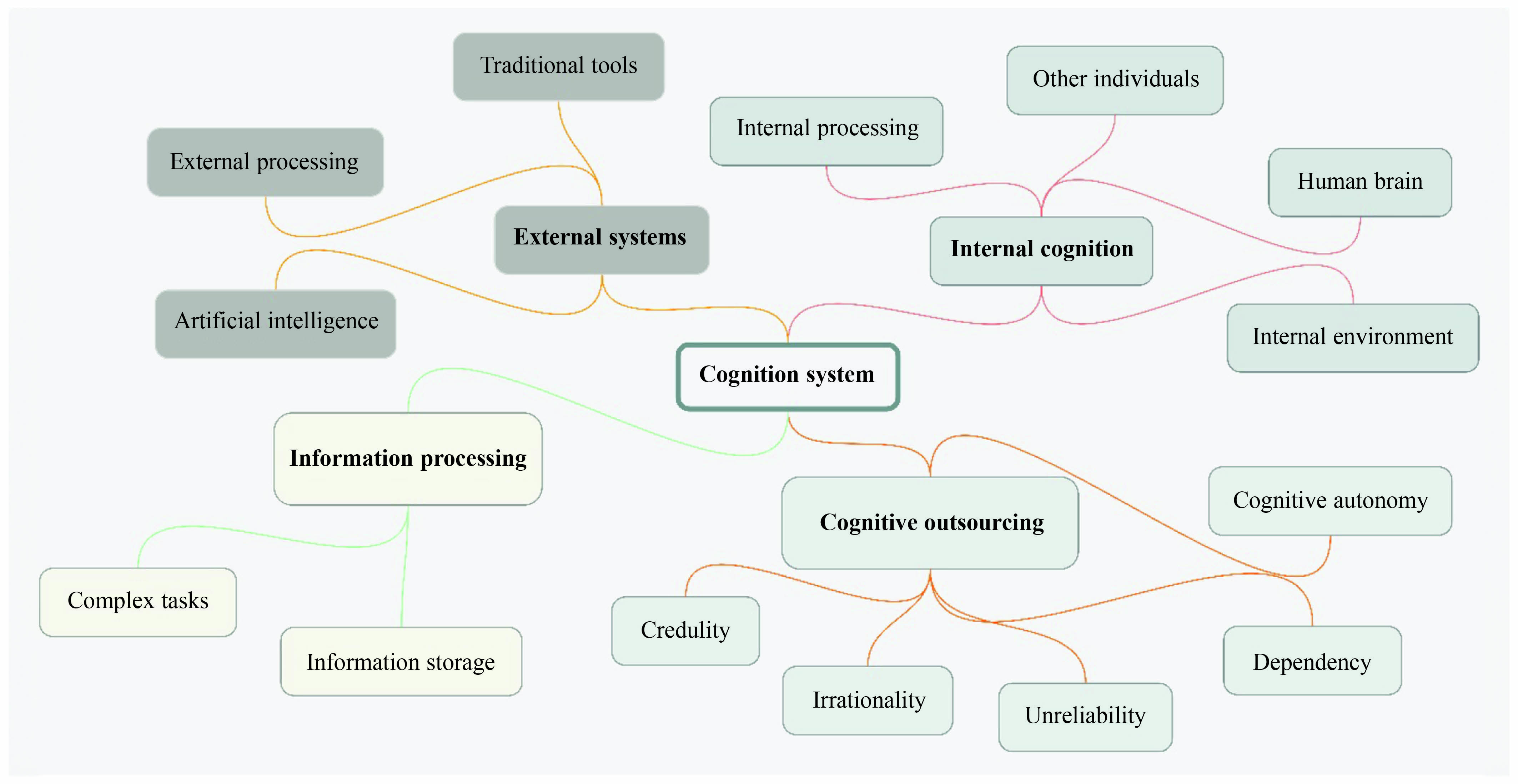

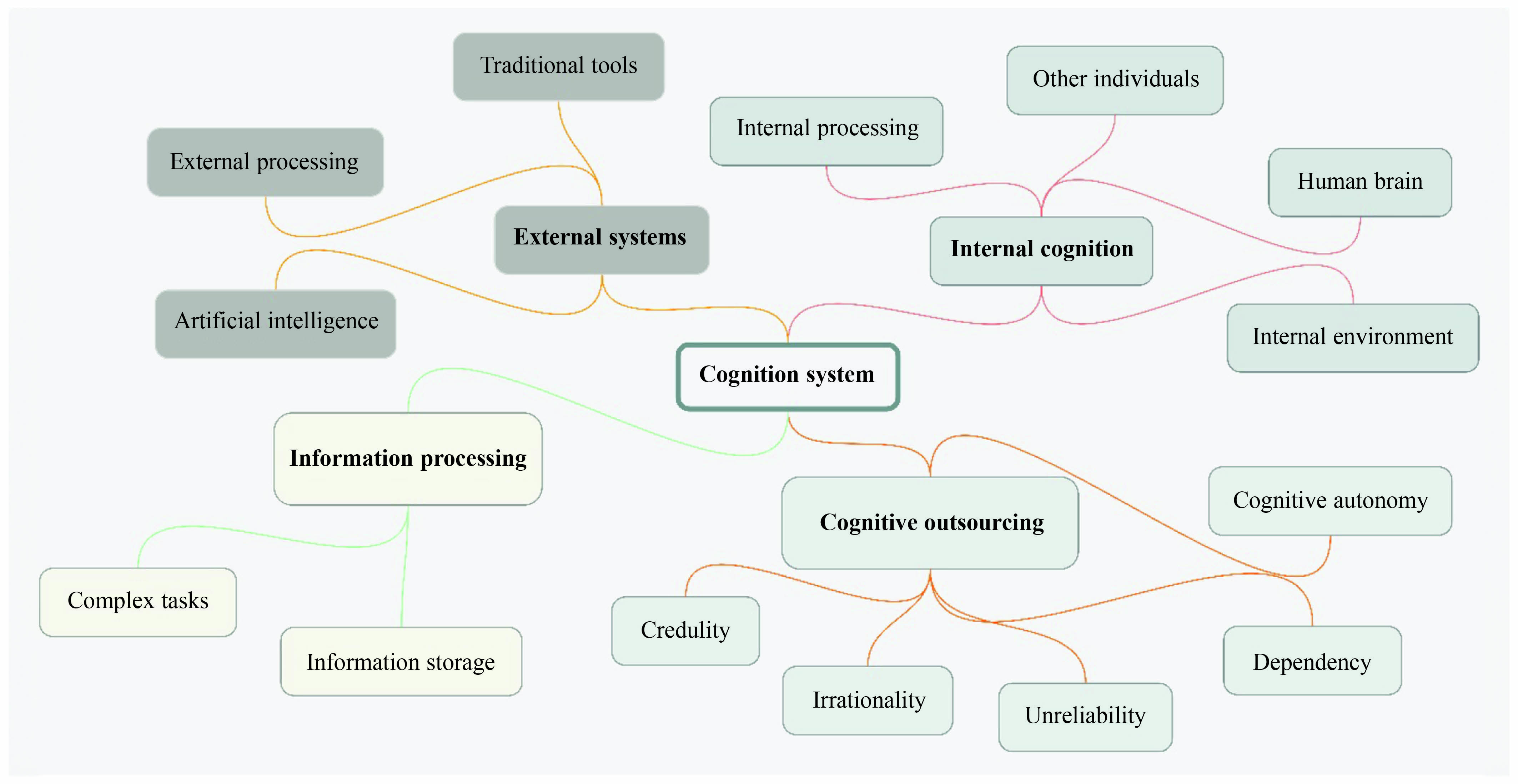

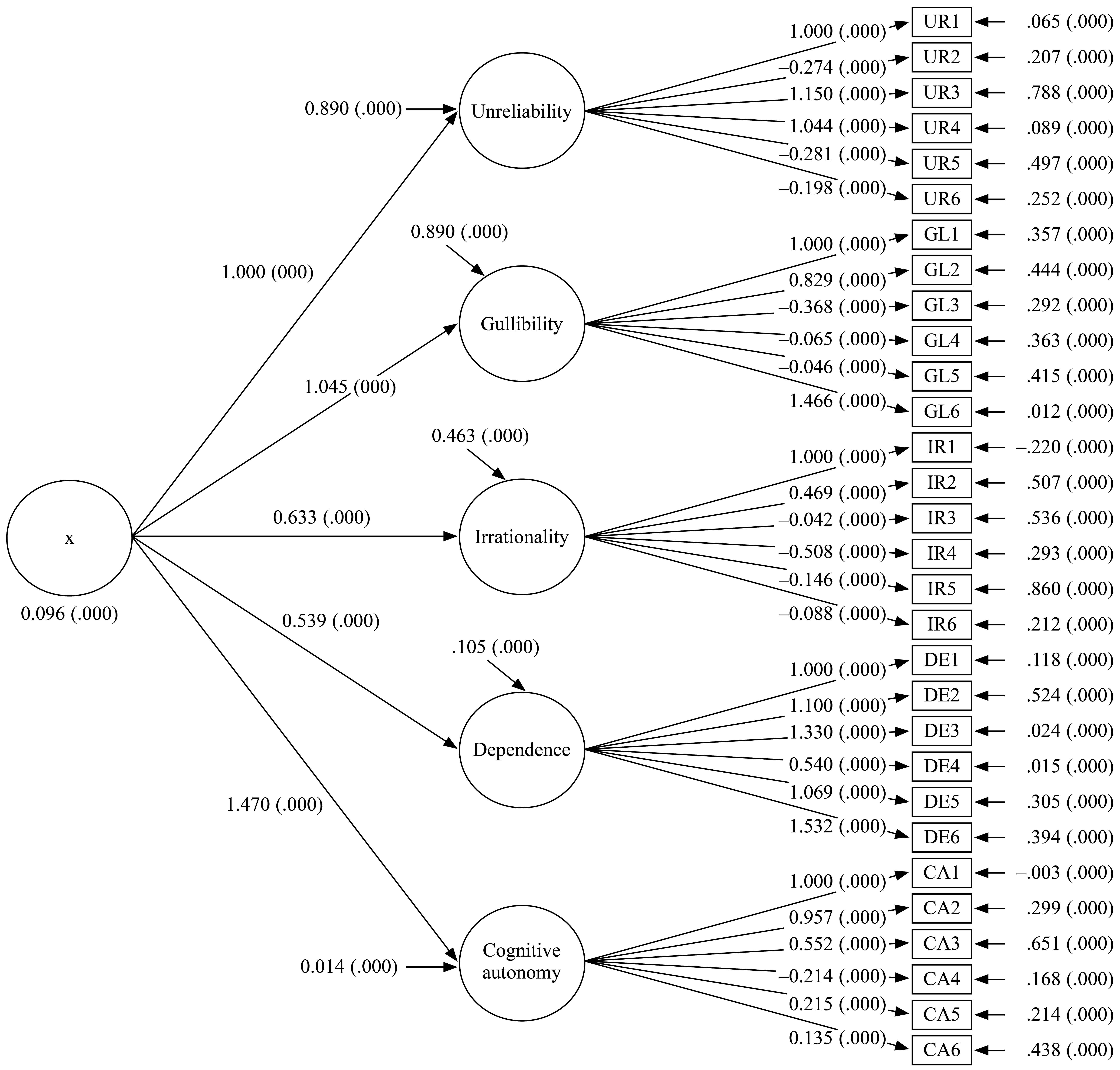

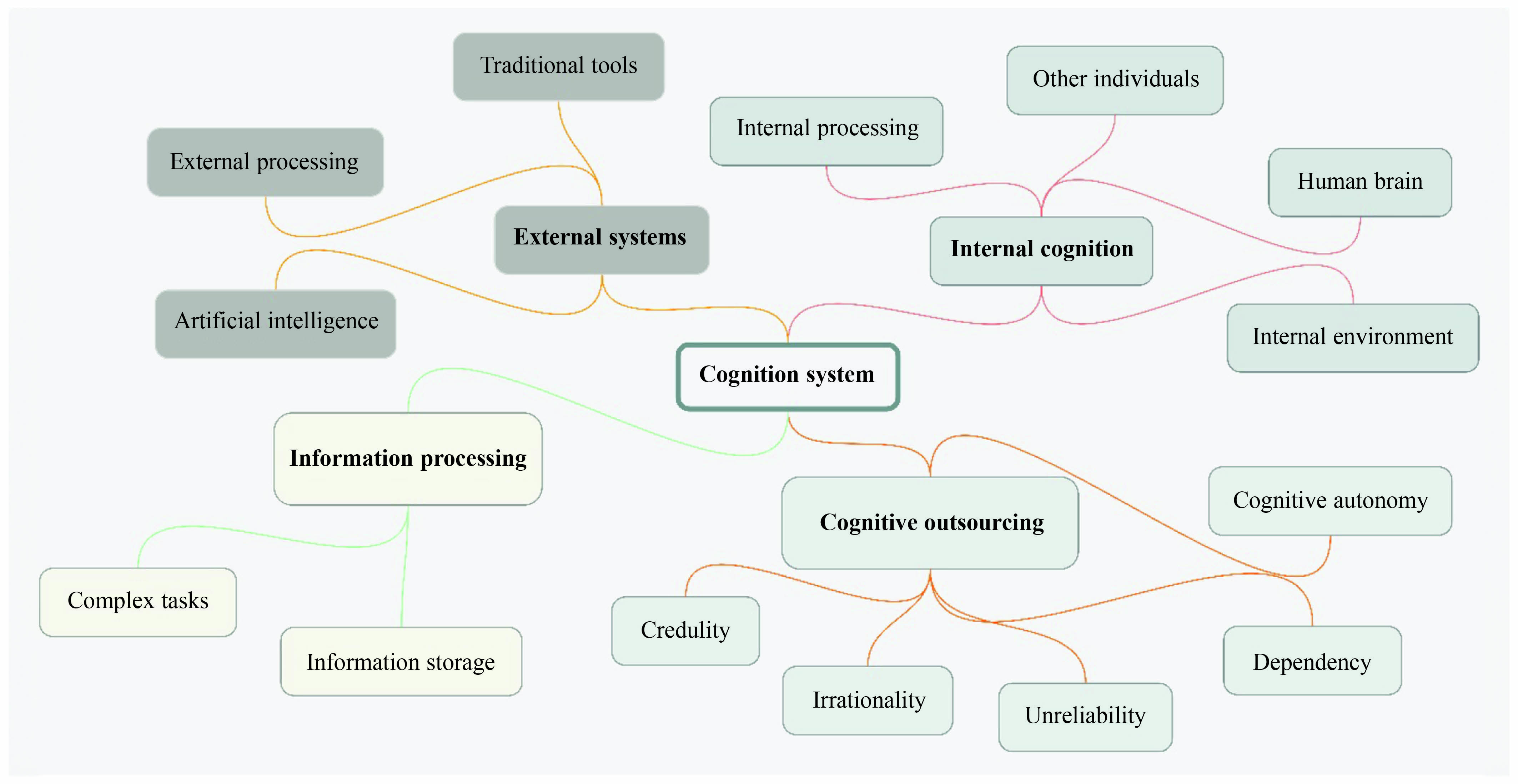

The psychological process of cognitive outsourcing involves a series of complex cognitive and emotional mechanisms that prompt individuals to transfer certain thinking tasks to external entities or tools (Wells & Matthews, 1996). In artistic creation, especially when AI creative tools like ChatGPT are used, this process encompasses not only the allocation and execution of tasks but also a deep interplay of creative thinking and emotional expression (Roumeliotis & Tselikas, 2023). Drawing on the process model of distributed cognition, we view cognitive outsourcing as a multidimensional psychological process (see Figure 1).

Figure 1. The Model of Cognitive Outsourcing (Created With ChatGPT-4)

Internal cognition represents the cognitive processes within an individual, encompassing attention, memory, and thinking. The precondition for cognitive outsourcing is that individuals internally evaluate the utility of outsourcing a given task and the technical support available for it (Ward, 2003). Our aim in this study was to identify the factors that contribute to this evaluation and to develop a scale that measures them.

Cognitive outsourcing occurs when individuals transfer part of their internal cognitive processing to an external system (computational support). During this stage, the social environment, made up of general natural laws, social culture, and common sense (Purkhardt, 1993) together with other individuals, influences internal cognition so that it aligns with the final decision (Proulx et al., 2016). After the individual has allocated the information processing, the external system aids in information search, organization, filtering, and decision making. Scholars have proposed that this reduces the cognitive load the individual must bear directly (Wu & Zhao, 2020), for instance when search engines are used to locate information or AI is employed to accomplish tasks. At the same time, the information provided by the external system helps the individual make a more rational or efficient decision.

Cognitive Instrumentalism and the Use of Artificial Intelligence Creative Tools

In research on cognitive outsourcing, scholars have focused on how outsourcing can free up cognitive resources for other tasks, while also raising concerns about declines in knowledge depth and memory capacity (Bai et al., 2023). Other studies have discussed how individuals rely on external systems for complex decision making and problem solving, which involves not only searching for and processing information but also trusting and depending on these external sources to varying degrees (Glikson & Williams Woolley, 2020). Research has indicated that as technology advances, the cognitive tasks that are outsourced become increasingly complex, profoundly affecting individual cognitive capacities and knowledge structures (Nagam, 2023).

The nonlinear thinking and complex pattern-processing abilities of AI provide new perspectives and inspiration for creativity, and they help creators by enabling them to outsource specific creative tasks, offering rapid information access, automated task processing, and complex decision support (Gorelik et al., 2020). Scholars have also studied how the proliferation of these technologies is affecting social cognitive structure, including changes in knowledge distribution and patterns of social interaction. Tufekci (2018) pointed out how algorithms on X (formerly Twitter) shape social interactions between users by recommending specific types of content that influence opinion formation. Tools like ChatGPT may play a similar role in online collaboration, influencing cooperation and communication among users through the content they generate.

Furthermore, Pariser (2012) addressed the phenomenon of algorithms that filter information based on user preferences. ChatGPT could similarly be used for automated information filtering, generating content based on user inputs, which might lead users to rely on algorithms to select information and thus shape the way they acquire it. Regarding the social impact of cognitive outsourcing, Turkle (1995) examined people’s growing engagement with computers and suggested that such dependence might lead to more interactions with machines rather than genuine human engagement, thereby changing the nature of social interaction; the same concern applies to present-day smart assistants and chatbots such as Siri and Alexa.

In summary, the advent of AI tools like ChatGPT has altered individuals’ cognitive processes and the ways in which people interact socially. These changes bear significantly on social cognitive structure and call for a scientific measure of cognitive outsourcing to better understand the impact of AI tools on cognitive and social processes.

Method

Collection of Baseline Corpus for a Cognitive Outsourcing Scale

We combined self-report and standardized scales, developed and validated through rigorous research methods to ensure reliable and valid measurement, with the aim of assessing more accurately, and better understanding, the dynamics and impact of cognitive outsourcing in AI creation.

To construct a scientific and reliable measurement tool for cognitive outsourcing, we first accumulated a substantial corpus of text related to cognitive outsourcing through online interviews and surveys. In the interview phase, we gathered approximately 100,000 characters of interview transcripts, from which we extracted potential dimensions to construct a cognitive outsourcing measurement system.

In the second phase, we tested the validity of the cognitive outsourcing items we had developed with a larger group through a survey, leading to the development of a scientifically reliable cognitive outsourcing scale. The first phase lasted just over 1 month, and the second phase took about 1 week. The respondents were mostly university faculty members, students, and media practitioners. The survey included items on the extent of participants’ involvement in cognitive outsourcing, the types of tasks involved, and the time they had spent on cognitive outsourcing tasks over the past week. The screening questionnaire was distributed through dedicated AI creation forums and WeChat groups. As of 2023, WeChat had approximately 1.3 billion monthly active users globally, a figure that had increased slightly over the year, indicating the platform’s continued popularity and reach in China (Adavelli, 2024). Articles on AI and large language models on this platform are widely discussed and number in the hundreds of millions. We therefore expected that deploying the survey within AI discussion communities on this platform would reach a broad cross-section of AI users. Through this survey, our aim was to collect data that were as diverse and rich as possible by including participants with the greatest variability in cognitive outsourcing behaviors during the creative process.

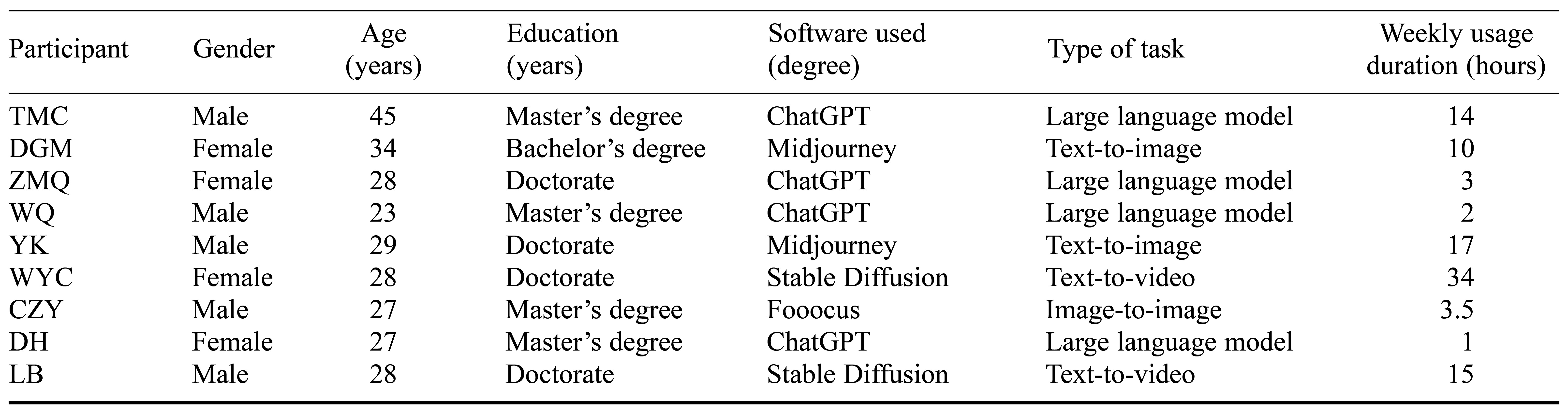

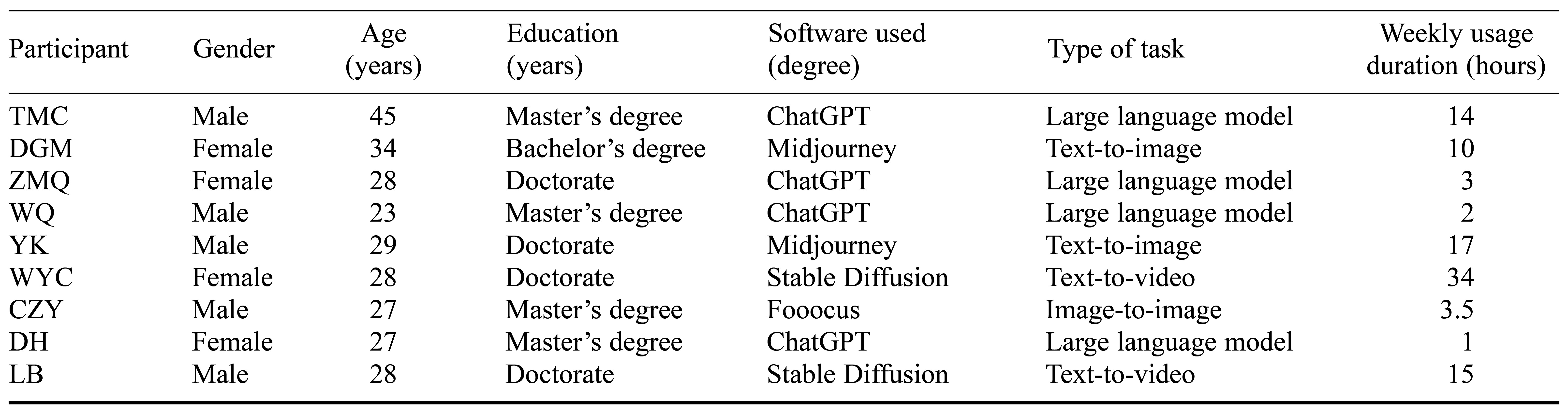

Interviews

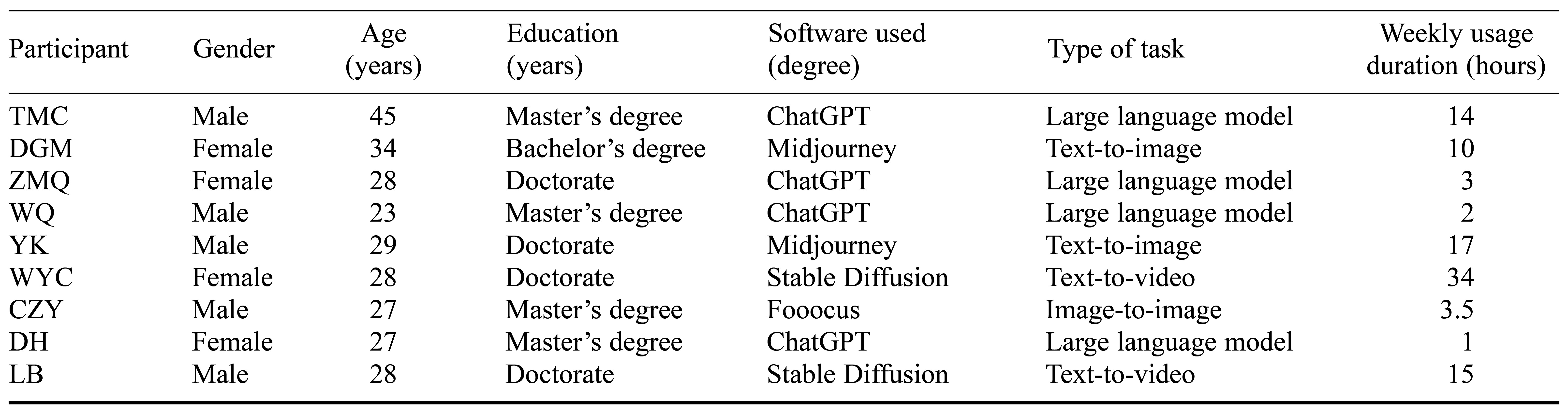

We conducted semistructured interviews with 10 individuals who had used AI creation tools. All respondents had substantial experience with AI content-generation models, large models, and AI practice. They included professors at Tsinghua University (mainly in the Schools of Computer Science and Journalism), senior engineers at Tencent and Alibaba, and senior reporters at the Xinhua News Agency. All respondents were invited to participate in the interviews and provided enthusiastic feedback based on their personal experiences. Some did not use AI tools extensively on a daily basis, but their daily engagement with AI technology development provided a representative cross-section of the academic, media, and corporate sectors. The interviews provided sufficient depth and breadth of information to understand the dimensions of cognitive outsourcing to AI, and were concluded when additional conversations with new or existing participants began to yield repetitive or no new relevant information (Zhang, 2021). The collected information included demographics, types of AI software used, common task types, and average weekly usage time (see Table 1). The 10 interviewees reported spending an average of 9.95 hours per week on AI creation.

Table 1. Demographic Information of Interviewees

Interview Analysis and Scale Item Development

We primarily asked users about their cognitive perceptions and collaborative behaviors during the AI creation process. Following grounded theory, we imported the interview data into NVivo 11. The analysis involved two main steps: open coding and axial coding. In open coding, the data are broken down into discrete parts and examined closely to identify key concepts and categories. Axial coding then relates these categories to each other, refining and organizing them into a coherent structure. This systematic process yielded items for the proposed scale that were grounded in empirical data and accurately reflected the participants’ experiences and insights.

Unreliability

Unreliability is a critical dimension in assessing the dependability and quality of AI tools’ content, focusing on the tool’s authority, historical performance, and credibility, as well as the consistency and accuracy of the generated content. In studying knowledge communities within the metaverse, researchers identified the source of AI-generated content and its quality as core factors influencing users’ sustained academic interaction (Song et al., 2023). Our interviewees often described how, when they first used AI tools, they turned to forums or conducted independent searches to check the tools’ generated output.

“I had frequent issues with ChatGPT 3.0 before, but after upgrading to 4.0, the situation improved significantly. I deem the information provided by this tool to be more reliable.” (Participant ZMQ)

“I’ve used several language software tools, and I rate them based on my experience. My work often requires producing detailed content, so I consider factors like the software’s accuracy and speed of generation.” (Participant TMC)

Gullibility

Gullibility encompasses the extent to which individuals accept erroneous information and their attitude toward verifying information during the text-generation process. Our interviewees mentioned encountering generated content that contradicted authentic information sources entirely. Some gradually shifted from believing in the infallibility of AI to handling its generated content with caution and independently verifying the results.

“Initially, I didn’t pay much attention to these discrepancies, assuming the AI’s outputs were accurate. However, after several evident mistakes, I began to doubt and have since become more cautious.” (Participant LB)

“I don’t wish to disseminate incorrect information, which primarily aligns with my personal principles and professional ethics.” (Participant CZY)

Irrationality

Irrationality primarily manifests as an excessive trust in unverified external information sources, neglecting the necessary scrutiny of the reliability and authenticity of these sources. This behavioral pattern reflects a preference for convenience when faced with an abundance of online information, that is, the tendency to accept information that appears persuasive or aligns with personal expectations without thorough verification. Irrational behavior may stem from time pressure, information overload, or excessive confidence in certain tools, leading to the uncritical acceptance of information. Compared with gullibility, irrationality refers to a broader range of behaviors where reasoning deviates from logical norms, including decisions influenced by biases or emotions, not limited to mere belief acceptance.

“I once saw a video claiming that using a few ‘magic bullet’ dialogue templates would yield great results, so I tried it directly, only to find out it wasn’t as simple as that.” (Participant WYC)

“I often maintain a skeptical attitude toward the authenticity of online information, especially when using ChatGPT for academic papers. I’m aware that not everything can be resolved so easily. I try not to trust sources I haven’t personally verified.” (Participant WQ)

Dependency

From the responses of our participants, it can be inferred that dependency primarily refers to an individual’s excessive reliance on external tools or resources. This dependency may lead to an overtrust in AI tools, neglecting the importance of enhancing personal skills and independent thinking. Although such dependency can increase efficiency in the short term, it may, in the long run, limit an individual’s capacity for innovation and independent problem solving.

“To be honest, I have developed an emotional dependency on certain software and online platforms. Tools like ChatGPT, which I use almost daily, have become like my right hand.” (Participant DGM)

“Frankly, without these tools, I would probably spend much longer doing it myself.” (Participant DH)

Cognitive Autonomy

Cognitive autonomy concerns individuals’ ability to remain independent in information processing, decision making, and learning. Dependency, by contrast, involves relying on external sources for knowledge and decisions, which can limit an individual’s ability to think critically and independently and may stunt cognitive development. Our participants’ responses suggest that, in the context of AI creation, although AI software significantly enhances work efficiency and decision speed, it also raises concerns about its impact on individual cognitive autonomy and about dependency. The cognitive autonomy dimension therefore emphasizes the importance of maintaining personal autonomy and independence in cognitive processes in a society that is highly dependent on technology.

“Sometimes, I wonder whether my designs truly originate from my own creativity or if they’re overly reliant on AI.” (Participant TMC)

“I’m also contemplating whether this means I’m gradually losing some of my inherent design judgment.” (Participant YK)

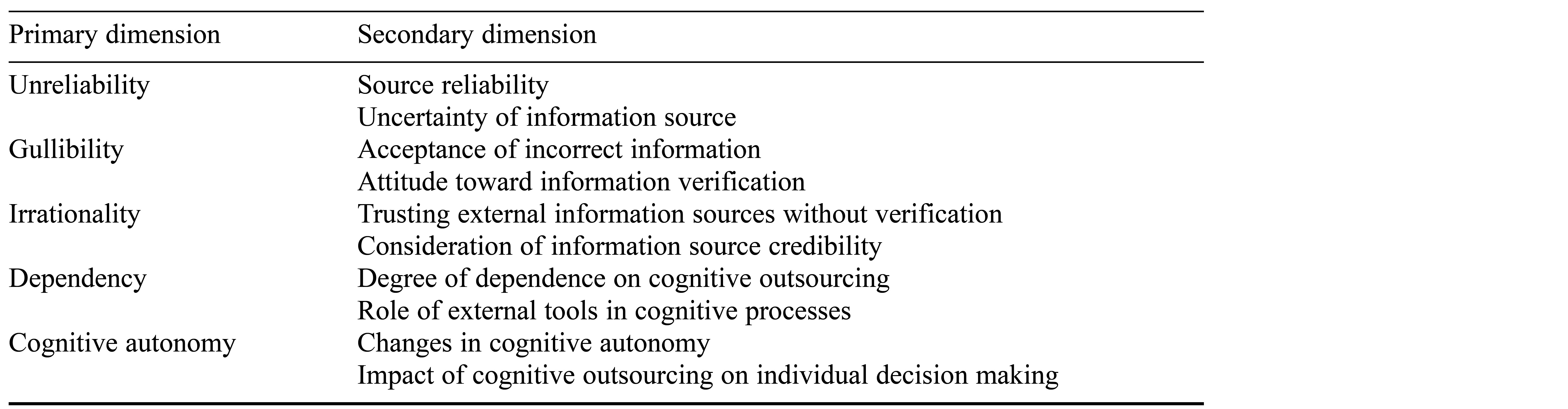

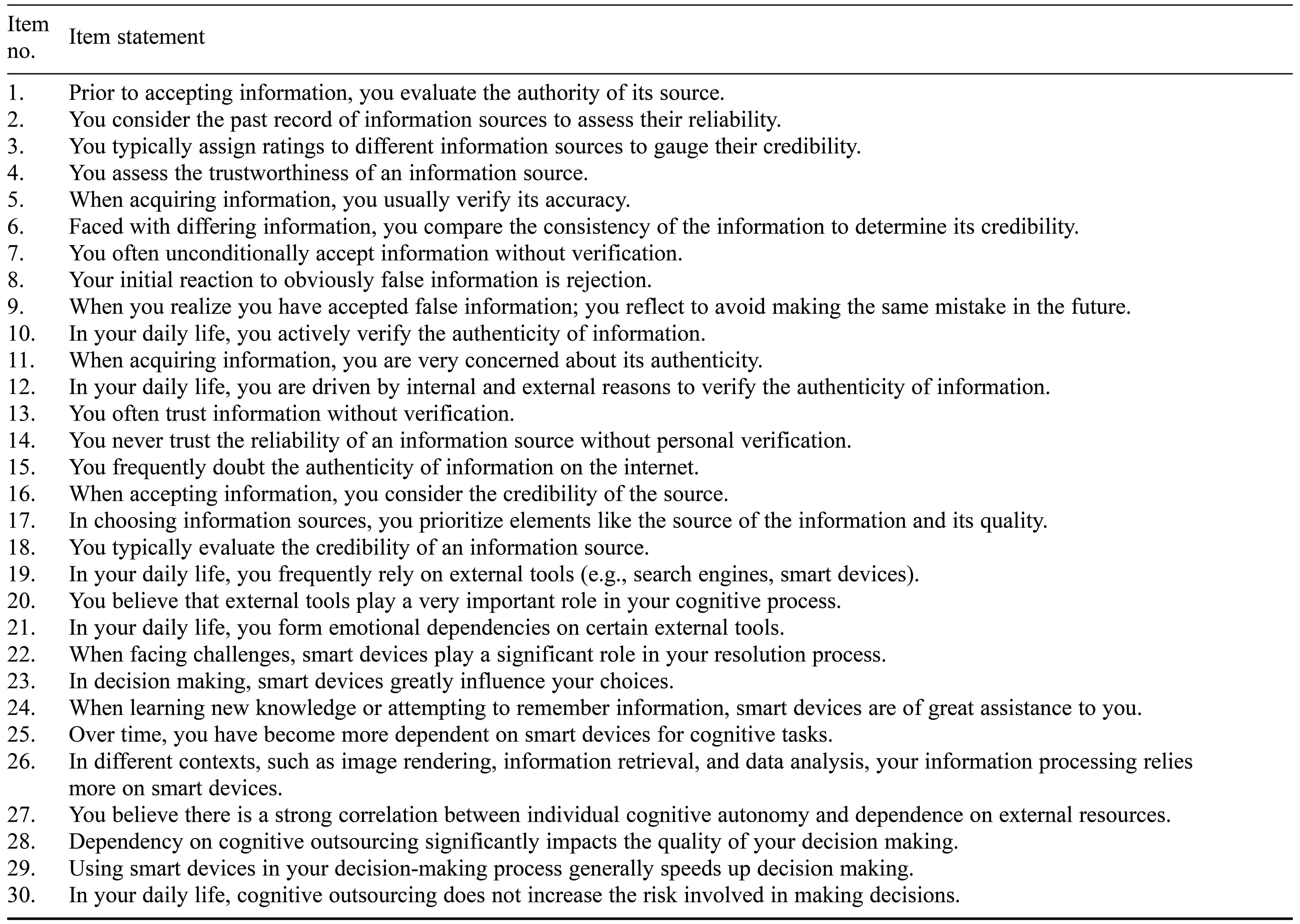

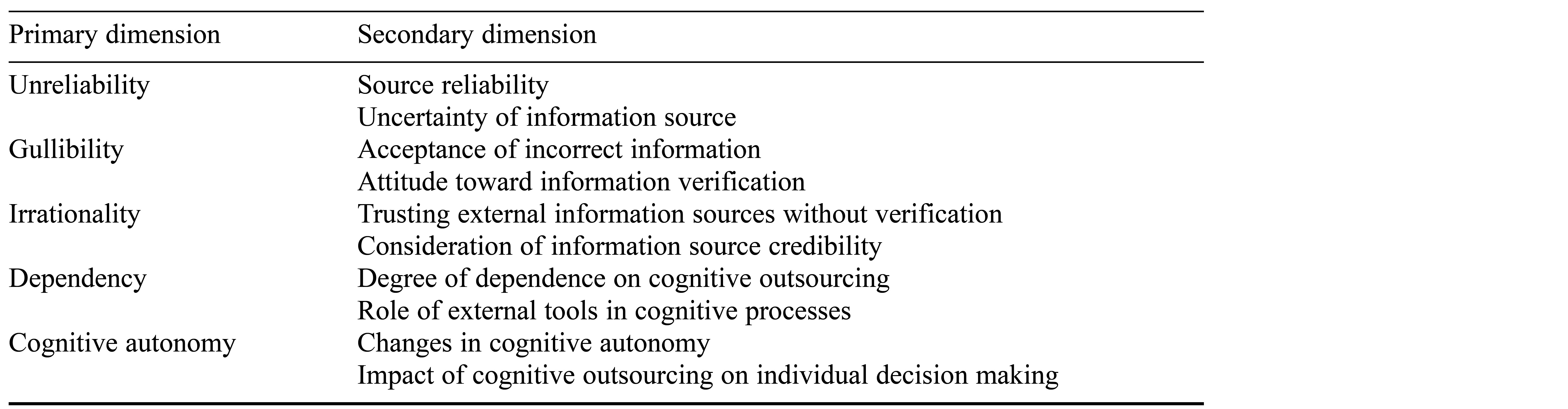

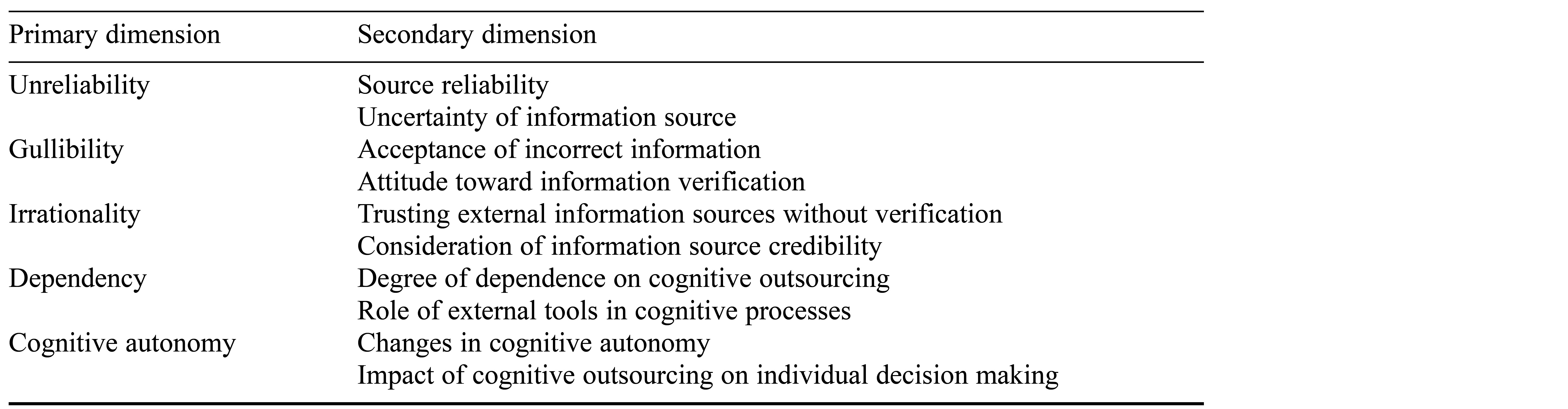

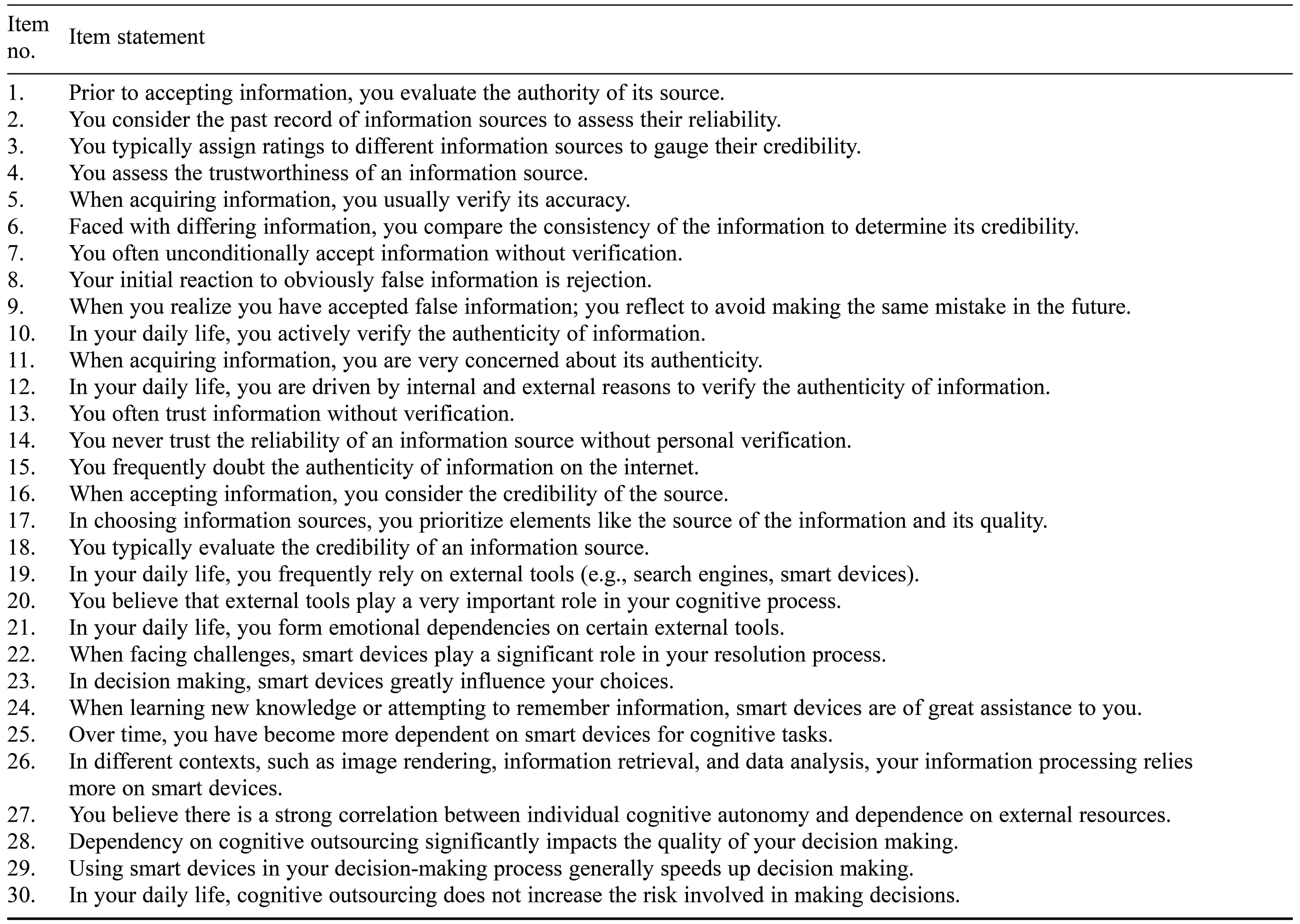

We selected five concepts and developed 30 items for our scale, as shown in Tables 2 and 3.

Table 2. Summary of Dimensions for Concepts in the Cognitive Outsourcing Scale

In developing the items on individuals’ cognitive outsourcing behaviors toward AI, we drew on the framework of cognitive outsourcing proposed by Ahlstrom-Vij (2016). To facilitate quantitative analysis, behaviors were rated on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree).

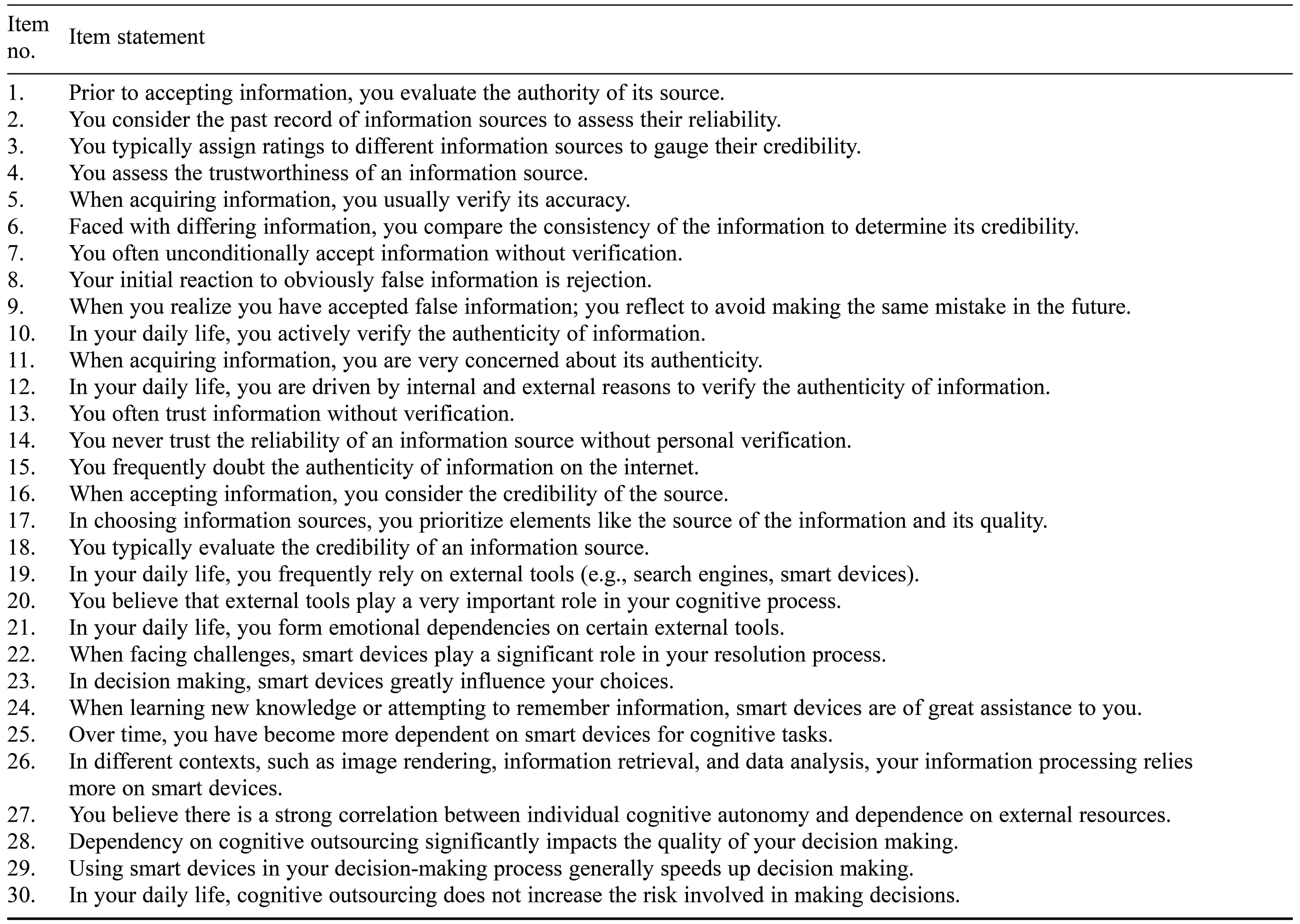

Table 3. Items in the Survey on Cognitive Outsourcing to Artificial Intelligence

Note. Items 7 and 13 are reverse scored.
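As a minimal sketch of how reverse-keyed items can be handled before scores are summed, the Python function below flips items 7 and 13 on the 1 to 5 scale, so that a response x becomes 6 - x. The function name, the list-of-answers input format, and the range check are illustrative assumptions, not the authors’ scoring procedure.

```python
# Illustrative scoring helper (hypothetical names); reverse-keyed items per the note to Table 3.
LIKERT_MIN, LIKERT_MAX = 1, 5
REVERSED_ITEMS = {7, 13}  # 1-based item numbers


def score_response(answers: list[int]) -> list[int]:
    """Return item scores with reverse-keyed items flipped (1<->5, 2<->4, 3 stays 3)."""
    scored = []
    for item_number, value in enumerate(answers, start=1):
        if not LIKERT_MIN <= value <= LIKERT_MAX:
            raise ValueError(f"item {item_number}: {value} is outside {LIKERT_MIN}-{LIKERT_MAX}")
        if item_number in REVERSED_ITEMS:
            value = LIKERT_MIN + LIKERT_MAX - value  # x -> 6 - x on a 1-5 scale
        scored.append(value)
    return scored


# Usage: a respondent who answers 5 to all 30 items.
scores = score_response([5] * 30)
```

In this usage example, items 7 and 13 are scored 1 and every other item keeps its score of 5.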

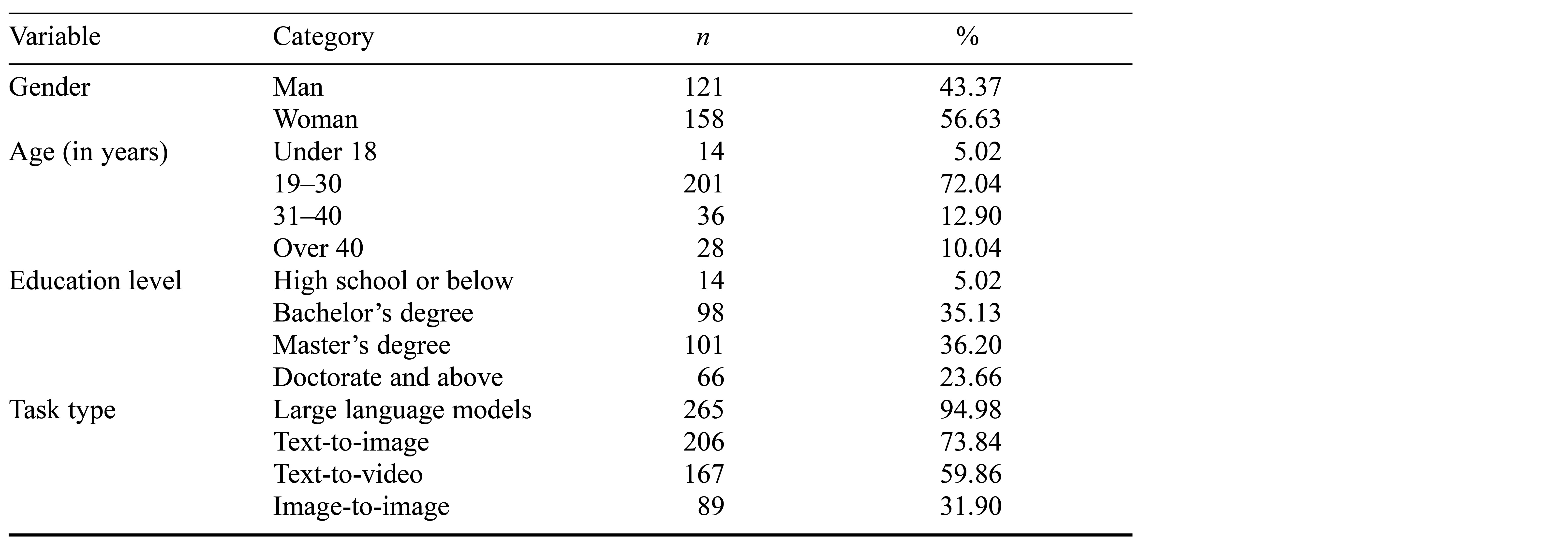

Survey Distribution and Basic Sample Statistics

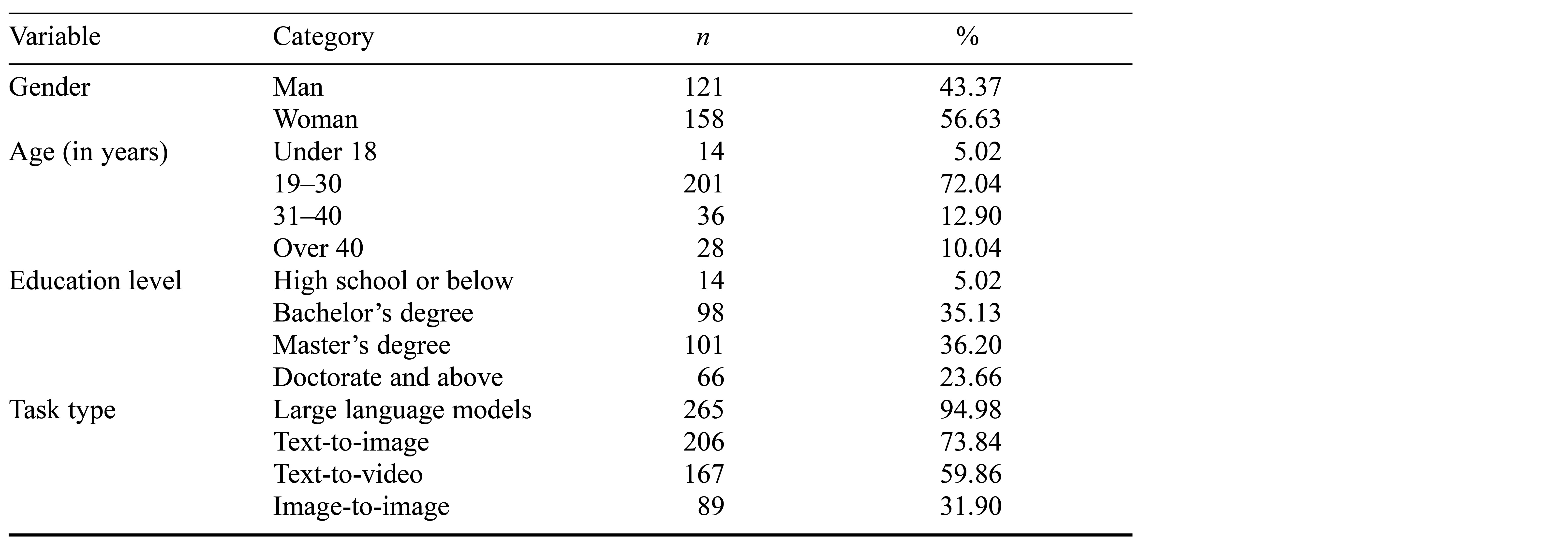

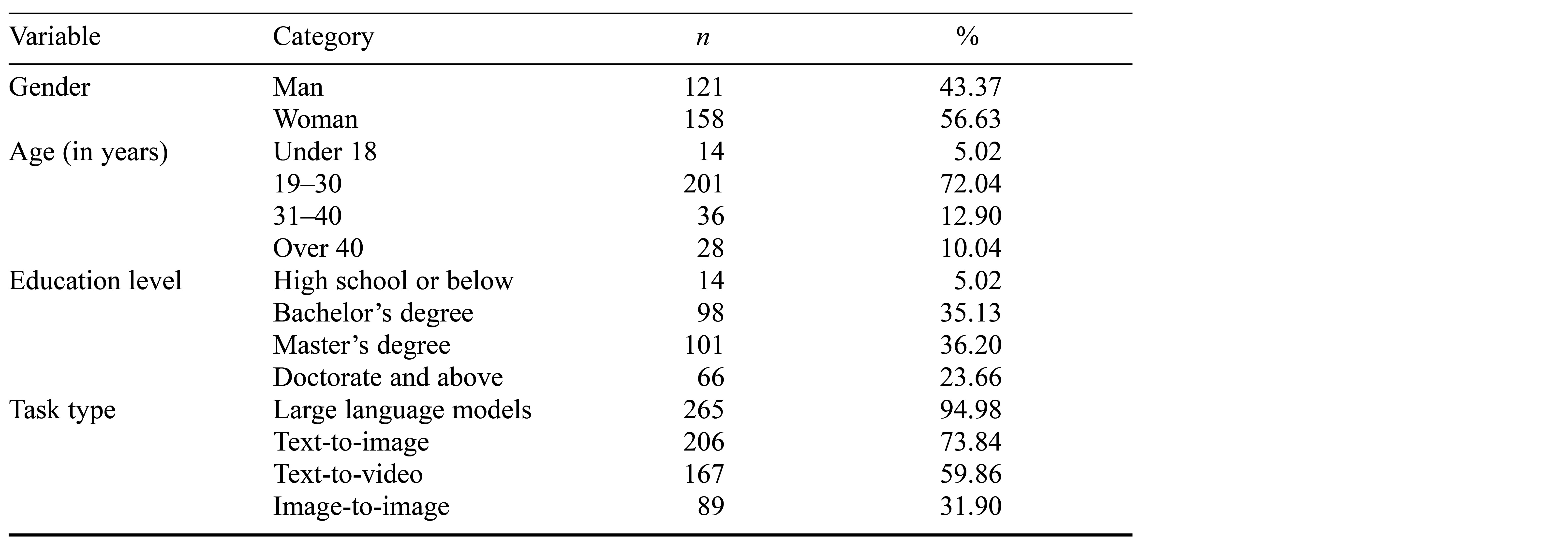

Survey distribution began on January 30, 2024, primarily through snowball sampling on WeChat, AI forums, and other online communities. Response collection closed on February 17, 2024, with responses from 306 participants. To ensure data quality, responses from 17 participants who completed the survey in less than 2 minutes were excluded. Ultimately, 279 valid survey responses were obtained. The demographics of the sample are shown in Table 4.
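The completion-time exclusion rule can be sketched as a simple filter. The record layout (`Response` with `respondent_id`, `completion_seconds`, and `answers`) is hypothetical; only the 2-minute cutoff comes from the text. A response completed in exactly 120 seconds is kept, because only responses finished in less than 2 minutes are excluded.

```python
from dataclasses import dataclass, field

MIN_SECONDS = 120  # the 2-minute cutoff described in the text


@dataclass
class Response:
    respondent_id: str          # hypothetical field names
    completion_seconds: float
    answers: list[int] = field(default_factory=list)


def split_by_speed(responses: list[Response], min_seconds: float = MIN_SECONDS):
    """Return (kept, excluded): responses at or above the cutoff vs. faster ones."""
    kept = [r for r in responses if r.completion_seconds >= min_seconds]
    excluded = [r for r in responses if r.completion_seconds < min_seconds]
    return kept, excluded
```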

Table 4. Demographic Description of Survey Sample

Scale Validation and Analysis

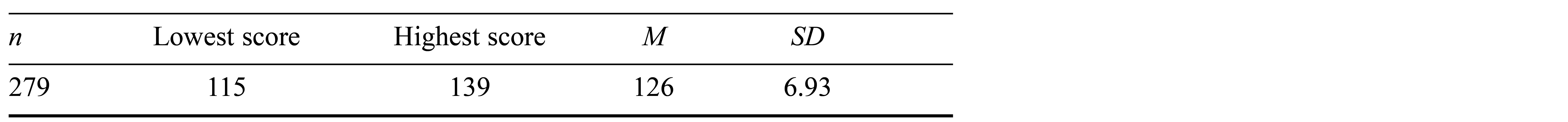

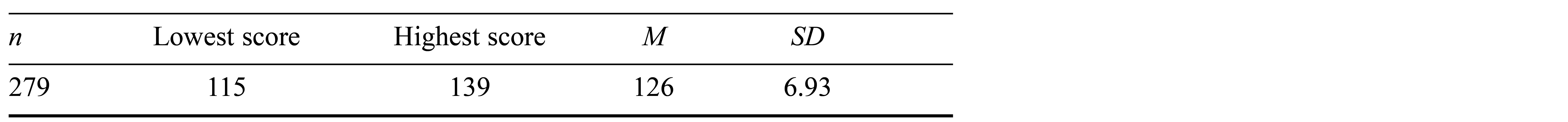

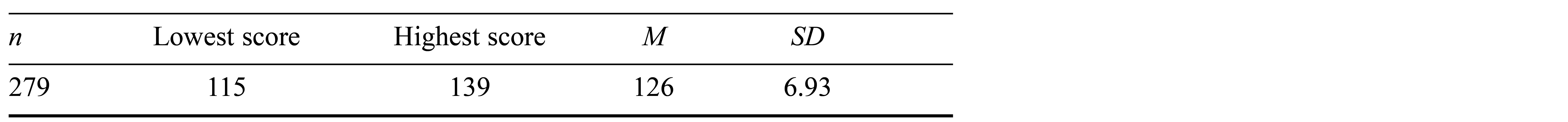

The results of the analysis of participants’ cognitive outsourcing behavior are shown in Table 5. The highest possible score on the survey was 140 points, and the mean score indicates that the overall level of cognitive outsourcing behavior in the sample was relatively high.

Table 5. Descriptive Statistics of Survey Participants’ Cognitive Outsourcing Behavior

Exploratory Factor Analysis

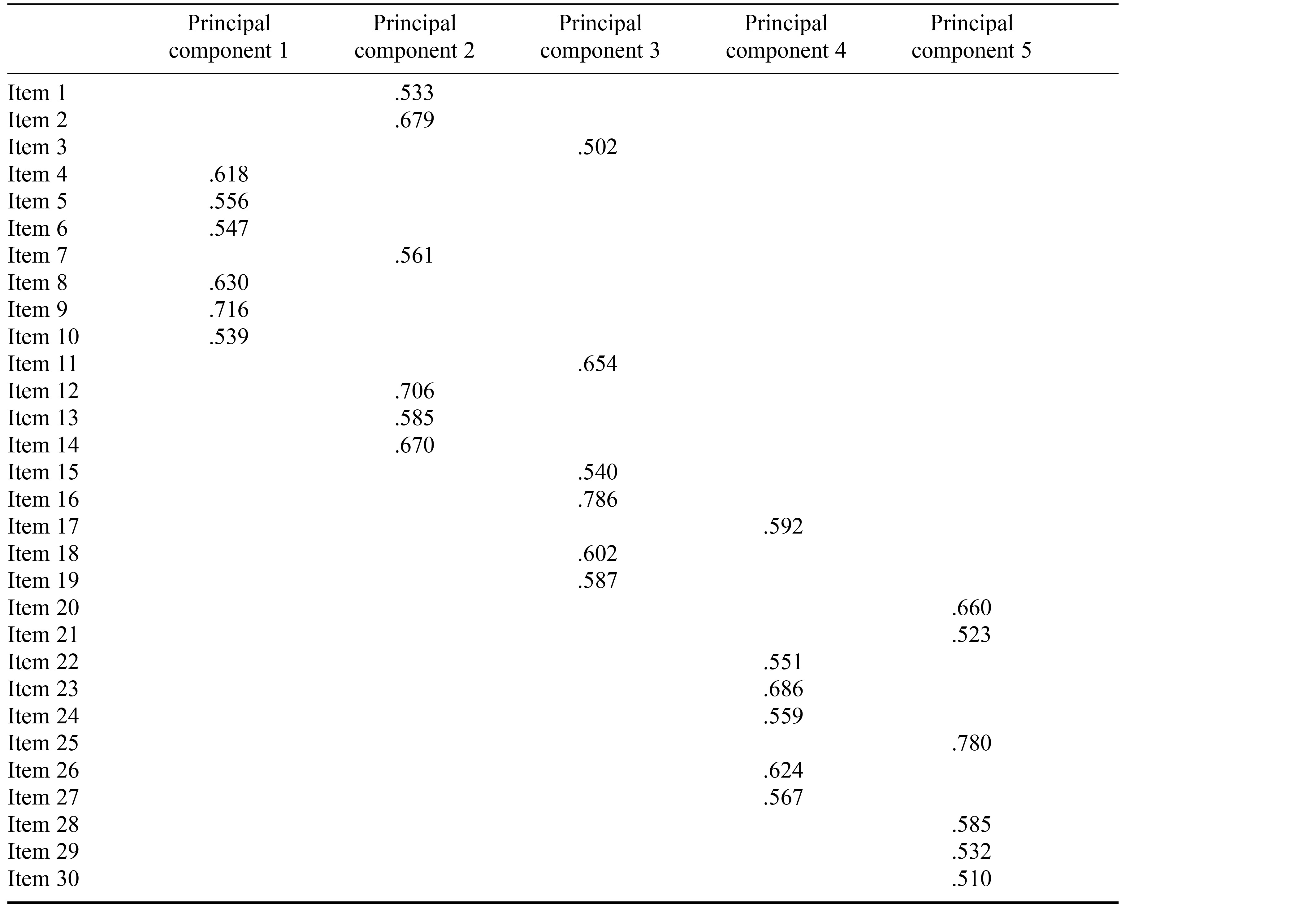

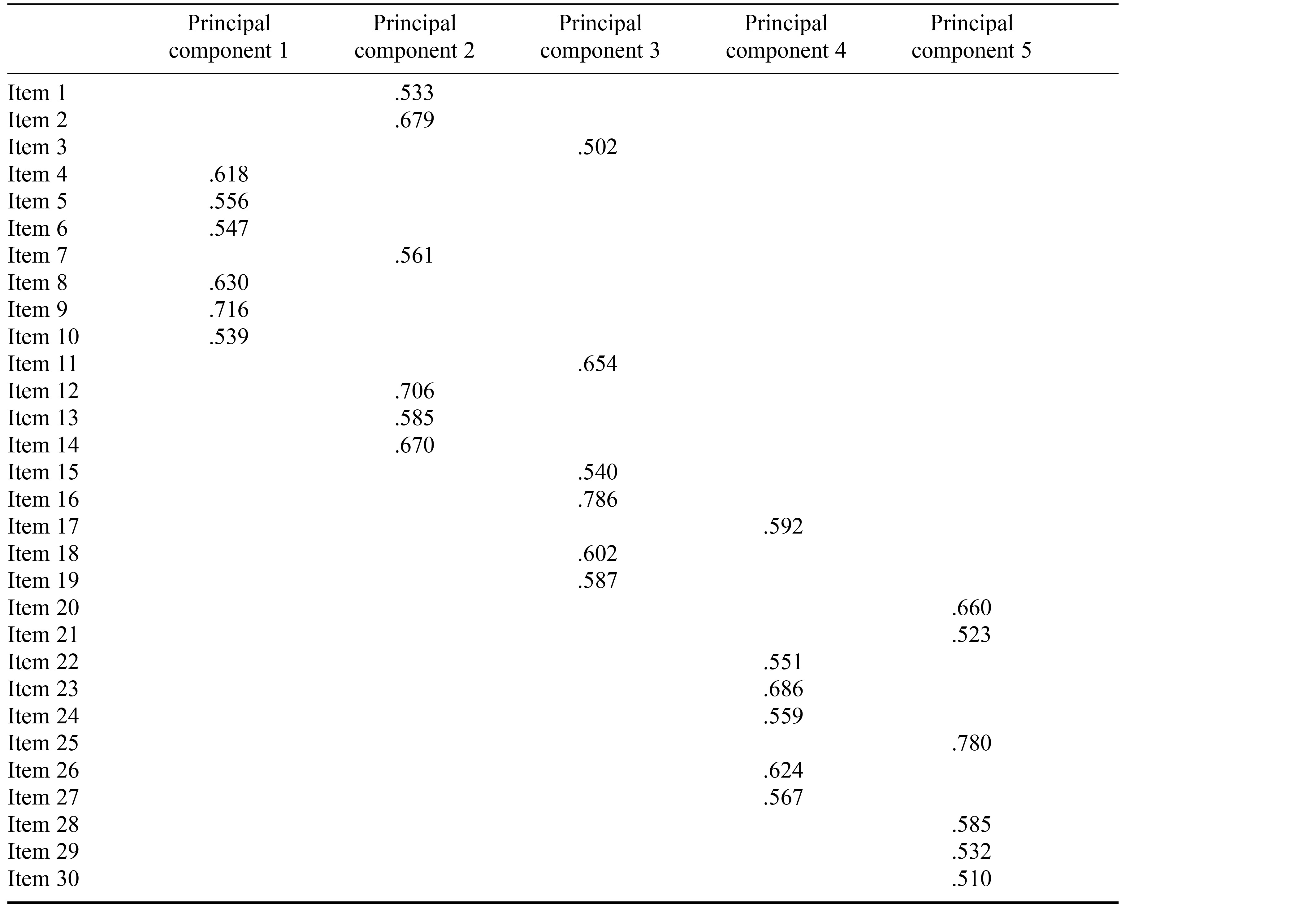

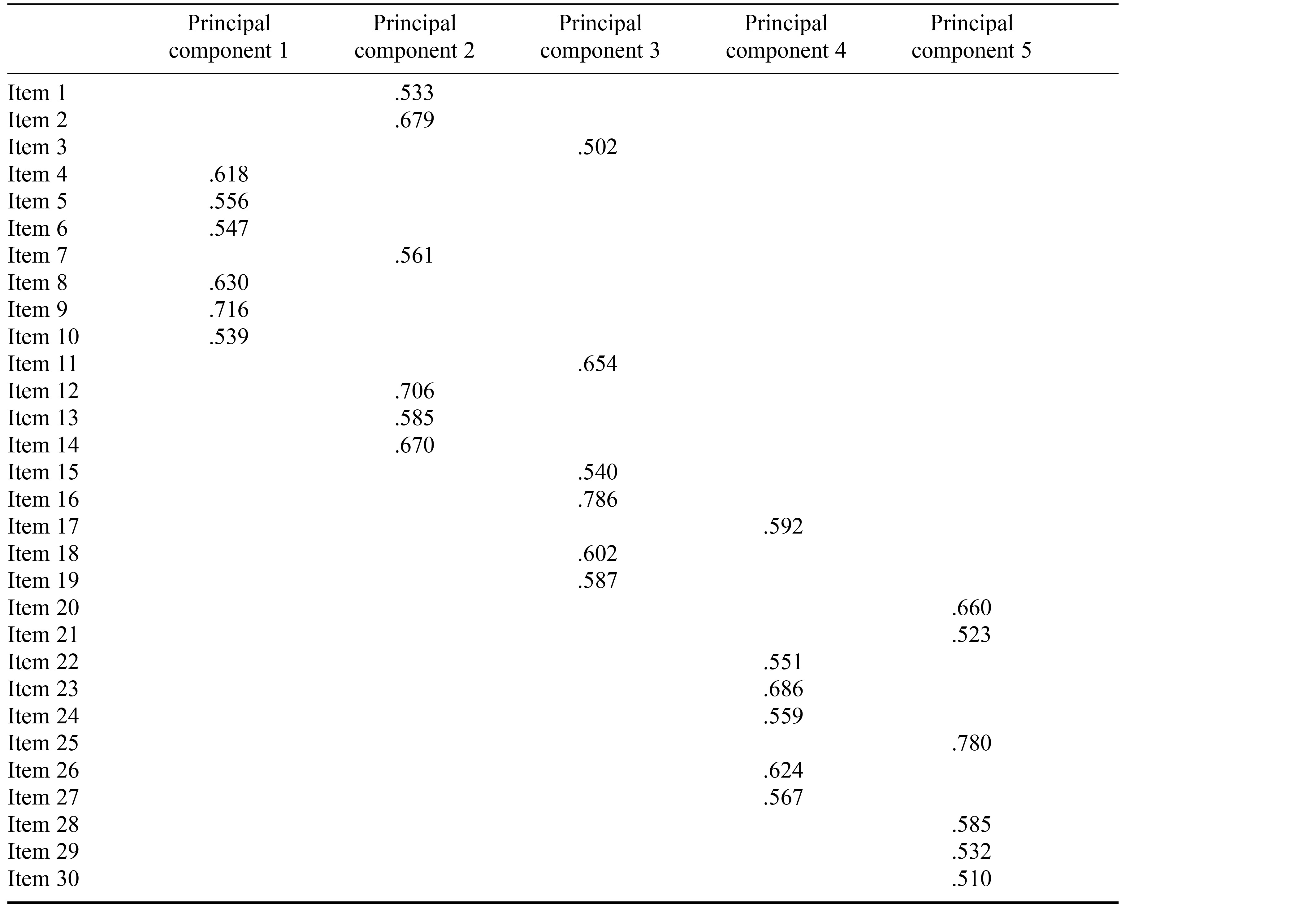

To assess construct validity, we performed an exploratory factor analysis using SPSS 25.0 following the criteria of Shevlin and Lewis (1999), who suggested retaining factors with eigenvalues exceeding 1 and requiring each factor loading to be at least .30 (see also Martin & Newell, 2004; Schriesheim & Eisenbach, 1995). We also ensured that each item in the survey corresponded to a single, clearly defined dimension (Büyüköztürk, 2007). Through this process, we constructed a 30-item scale for assessing cognitive outsourcing to AI. The factor loadings are presented in Table 6.
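The two retention rules can be illustrated with NumPy. This sketch assumes an n × p array of item scores with items as columns; it counts eigenvalues of the item correlation matrix greater than 1 (the Kaiser criterion) and flags items whose largest absolute loading falls below .30. It illustrates the criteria only and does not reproduce SPSS’s extraction or rotation; the function names are our own.

```python
import numpy as np


def kaiser_factor_count(data: np.ndarray) -> int:
    """Count eigenvalues of the item correlation matrix that exceed 1."""
    corr = np.corrcoef(data, rowvar=False)   # items are columns
    eigenvalues = np.linalg.eigvalsh(corr)   # symmetric matrix -> real eigenvalues
    return int(np.sum(eigenvalues > 1.0))


def items_below_cutoff(loadings: np.ndarray, cutoff: float = 0.30) -> list[int]:
    """Return 0-based indices of items whose largest absolute loading is below cutoff."""
    max_abs = np.max(np.abs(loadings), axis=1)  # one row per item, one column per factor
    return [int(i) for i in np.flatnonzero(max_abs < cutoff)]
```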

Table 6. Factor Loadings for the Cognitive Outsourcing Items

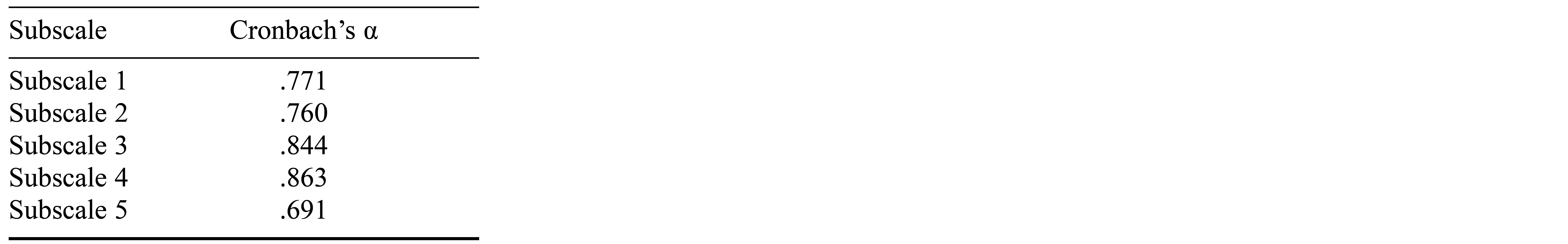

The formula for Cronbach’s alpha and an example of its application are presented in Figures 2a and 2b.

Figure 2a. Computation Formula for Cronbach's Alpha

Figure 2b. Example of Application of Cronbach’s Alpha Formula
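For reference, the standard form of Cronbach’s alpha is shown below, where $k$ is the number of items, $\sigma^2_{Y_i}$ is the variance of item $i$, and $\sigma^2_X$ is the variance of the total score $X = \sum_{i=1}^{k} Y_i$. The notation is ours and may differ from that used in Figure 2a.

$$
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k} \sigma^2_{Y_i}}{\sigma^2_X}\right)
$$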

We obtained a Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy of .81, indicating that the sample was well suited to factor analysis, and Bartlett’s test of sphericity yielded χ² = 826.05 (p < .01). The five principal components together accounted for approximately 87.1% of the total variance. Correlation coefficients between items ranged from −.80 to 1.0, indicating a broad spectrum of inter-item correlations, from strongly negative to perfectly positive. Correlations between the subscale scores and the overall scale score ranged from −.20 to .94, indicating that certain principal components were highly positively correlated with the overall scale score.
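The two adequacy checks reported above can be sketched in NumPy, assuming an n × p array of item scores with items as columns. Bartlett’s statistic uses the standard approximation $\chi^2 = -\left[(n-1) - \frac{2p+5}{6}\right]\ln|R|$ with $p(p-1)/2$ degrees of freedom, and the overall KMO compares squared correlations with squared partial correlations derived from the inverse correlation matrix. The p-value step is omitted to avoid a SciPy dependency, and the function names are illustrative.

```python
import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's test statistic and degrees of freedom for an n x p data matrix."""
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(corr)          # ln|R|, numerically stable
    chi_square = -((n - 1) - (2 * p + 5) / 6) * logdet
    dof = p * (p - 1) // 2
    return float(chi_square), dof


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    # Partial correlations between item pairs, controlling for all other items.
    d = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(d, d)
    off_diag = ~np.eye(corr.shape[0], dtype=bool)  # ignore the diagonal
    r2 = np.sum(corr[off_diag] ** 2)
    q2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + q2))
```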

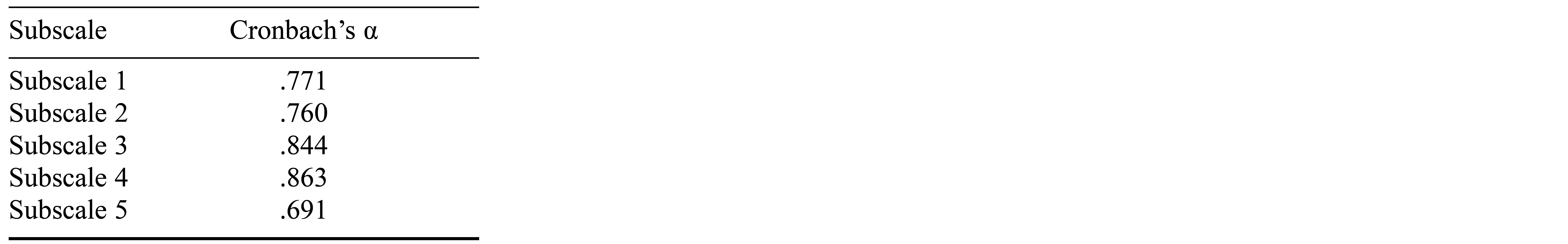

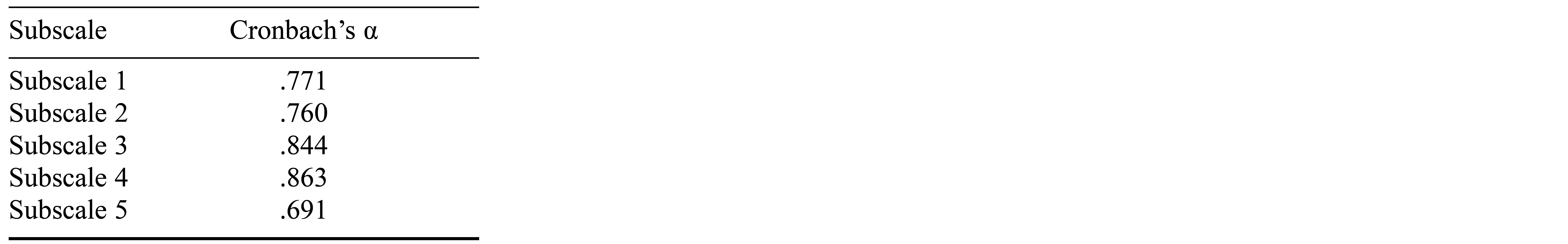

Split-half reliability was computed using the odd–even item method. Cronbach’s alpha was .77 for the half composed of odd-numbered items and .80 for the half composed of even-numbered items, and the average split-half reliability across the two halves was .78. Cronbach’s alpha values for each principal component are presented in Table 7.

Table 7. Cronbach’s Alpha Values for Each Subscale

Cronbach’s alpha values are generally considered acceptable above .70, good above .80, and excellent above .90. By these benchmarks, the internal consistency of the subscales for cognitive outsourcing to AI ranged from good to excellent, with Subscale 4 showing the highest internal consistency and Subscale 5 comparatively lower consistency. On the basis of the item content and the theoretical framework, the five factors were named unreliability, gullibility, irrationality, dependence, and cognitive autonomy.

The unreliability factor had loadings ranging from .539 to .716, accounted for 31.4% of the total variance, and comprised six items addressing the potential for cognitive outsourcing to lead to unreliable beliefs. The gullibility factor had loadings between .533 and .706, explained 19.7% of the total variance, and comprised six items exploring whether cognitive outsourcing makes individuals more susceptible to accepting incorrect information. The irrationality factor had loadings ranging from .502 to .786, accounted for 17.6% of the total variance, and comprised six items focusing on whether trusting external information sources without verification constitutes irrational behavior. The dependence factor had loadings between .551 and .686, explained 10.5% of the total variance, and comprised six items analyzing dependence on cognitive outsourcing and its potential impact on cognitive health. The cognitive autonomy factor had loadings ranging from .510 to .780, accounted for 8.0% of the total variance, and comprised six items examining whether cognitive outsourcing undermines individual cognitive autonomy.

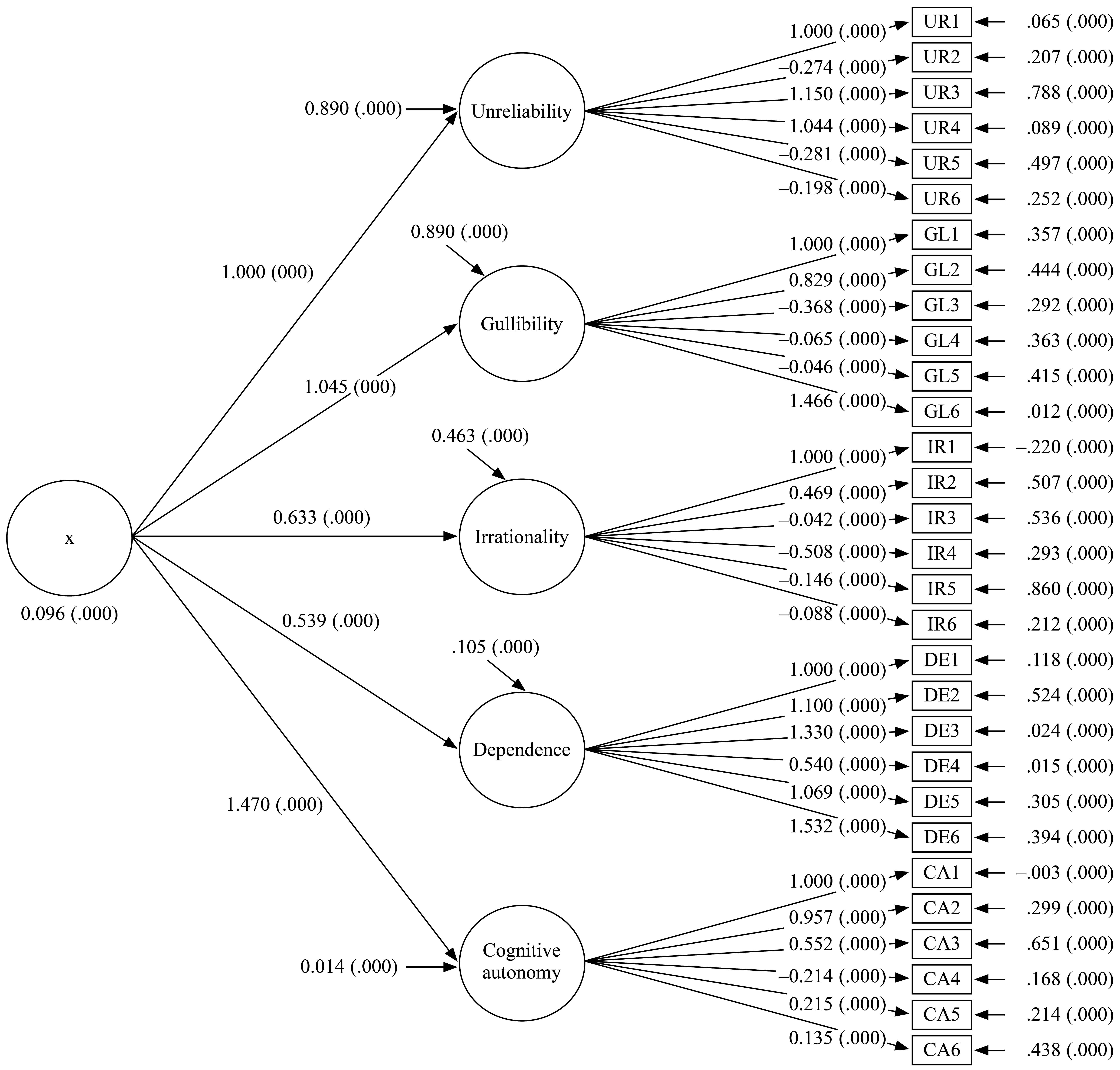

Confirmatory Factor Analysis

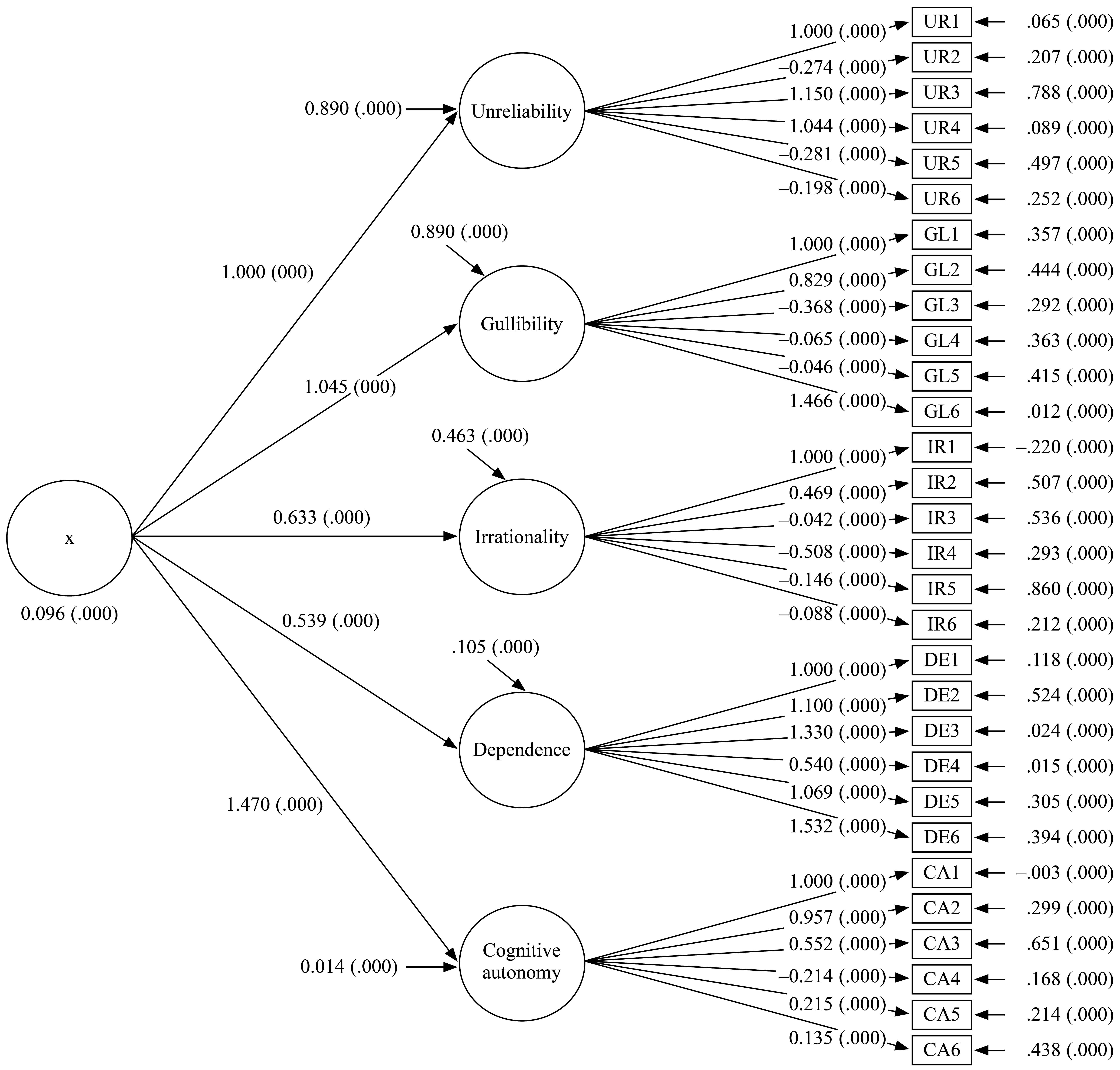

We employed Mplus 8.3 to perform a confirmatory factor analysis of the Cognitive Outsourcing Behavior Toward Artificial Intelligence Scale, encompassing five dimensions and 30 items. The analysis utilized data from all 279 participants in the sample. The results are illustrated in Figure 3.

Figure 3. Confirmatory Factor Analysis Results for the Cognitive Outsourcing Behavior Toward Artificial Intelligence Scale

Note. X represents the level of cognitive outsourcing.

This analysis primarily examined the fit of the Cognitive Outsourcing Behavior Toward Artificial Intelligence Scale. The results indicated an acceptable fit to the data, χ² = 1189.765, df = 24, p < .001, comparative fit index (CFI) = .829, Tucker–Lewis index (TLI) = .916, root-mean-square error of approximation (RMSEA) = .081, standardized root-mean-square residual (SRMR) = .043. Each factor in the scale can therefore be assessed independently to identify specific attributes of cognitive outsourcing that may be more pronounced in different creative contexts. An individual’s overall score on the scale can serve as a comprehensive indicator of their propensity toward cognitive outsourcing, which may be particularly useful in studies correlating this tendency with outcomes in creativity and innovation. The scale can thus serve as a diagnostic tool and, once its predictive validity has been tested, potentially as a predictive tool, enhancing understanding of the impact of AI in creative domains.
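As an illustration of how one of these indices is derived, the RMSEA point estimate can be computed from the model chi-square, its degrees of freedom, and the sample size $N$ as $\sqrt{\max(\chi^2 - df, 0) / [df(N-1)]}$. The sketch below implements this textbook formula; some programs divide by $N$ rather than $N - 1$, so small discrepancies from software output are possible. The function name is our own.

```python
import math


def rmsea(chi_square: float, dof: int, n: int) -> float:
    """Point estimate of RMSEA from a model chi-square, its df, and sample size n."""
    if dof <= 0 or n <= 1:
        raise ValueError("dof must be positive and n must exceed 1")
    # Excess chi-square over its expected value (df), per degree of freedom and per case.
    return math.sqrt(max(chi_square - dof, 0.0) / (dof * (n - 1)))
```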

Discussion

Language models like ChatGPT enable creators to use AI to assist in creation, offering new modes of interaction between human creativity and machine intelligence (Mazzone & Elgammal, 2019). In this research we have provided a comprehensive framework and practical tools for understanding and assessing human–AI collaboration in the creative process, from conceptual definition and dimension identification to scale development.

The development of the Cognitive Outsourcing Behavior Toward Artificial Intelligence Scale is particularly significant at a time when technology increasingly intersects with creative practice. With the widespread application of AI in science and artistic creation, interaction between creators and AI is increasing (Amba & Singh, 2023), creating a growing need to understand and evaluate this new type of creative relationship. The scale provides a systematic methodological tool for assessing how human creators use AI in their creative endeavors, enabling researchers and practitioners to explore the psychological and behavioral patterns of cognitive outsourcing in depth. It may also promote the integration of cognitive science and AI and provide new experimental tools for studying the mechanisms of creative thinking, helping researchers understand how human creative thinking works and how AI influences it, and thereby advancing theories of innovative thinking.

However, the construction of this scale also has limitations. The theoretical induction, the corpus, and the item pool could all be refined, and more recent international research on large language models should be incorporated. The sample size also needs to be increased, and the scale should be updated iteratively as large language models develop. Nevertheless, the Cognitive Outsourcing Behavior Toward Artificial Intelligence Scale encourages those in both academic and organizational spheres to reflect deeply on ethical issues in human–machine collaboration, such as creative attribution, cognitive autonomy, and the moral boundaries of AI technology. Our scale also provides a foundation for future research, enabling further exploration of other dimensions and influencing factors of cognitive outsourcing, and expanding understanding of AI-assisted creation.

The Cognitive Outsourcing Behavior Toward Artificial Intelligence Scale provides an effective tool for assessing the psychological attitudes and behavioral patterns of individuals or teams in AI-assisted creation. This work offers empirical evidence and theoretical support for research on the collaborative relationship between humans and AI, for optimizing human–machine interaction design, and for advancing creative education and practice, and thus holds substantial academic and practical value.

References

Adavelli, M. (2024, January 2). 22 exciting WeChat statistics. Techjury.

Ahlstrom-Vij, K. (2016). Is there a problem with cognitive outsourcing? Philosophical Issues, 26(1), 7–24.

Amba, R., & Singh, H. (2023, November 22–24). The influence of the artificial intelligence based CGI on the growth of the film industry [Paper presentation]. The Seventh International Conference on Image Information Processing, Jaypee University of Information Technology, Waknaghat, Solan, India.

Bai, L., Liu, X., & Su, J. (2023). ChatGPT: The cognitive effects on learning and memory. Brain-X, 1(3), Article e30.

Büyüköztürk, Ş. (2007). Data analysis handbook for social sciences [In Turkish]. Pegem A Yayıncılık.

Chiu, T. K. F., Ahmad, Z., Ismailov, M., & Sanusi, I. T. (2024). What are artificial intelligence literacy and competency? A comprehensive framework to support them. Computers and Education Open, 6, Article 100171.

Edvardsson, I., & Durst, S. (2020). The knowledge and learning potential of outsourcing. In K. Kumar & J. Davim (Eds.), Methodologies and outcomes of engineering and technological pedagogy (pp. 28–49). IGI Global.

Fellbaum, C. (2013). Obituary: George A. Miller. Computational Linguistics, 39, 1–3.

Glikson, E., & Williams Woolley, A. (2020). Human trust in artificial intelligence: Review of empirical research. Academy of Management Annals, 14(2), 627–660.

Gorelik, N., Chong, J., & Lin, D. J. (2020). Pattern recognition in musculoskeletal imaging using artificial intelligence. Seminars in Musculoskeletal Radiology, 24(1), 38–49.

Huang, K.-L., Liu, Y.-C., Dong, M.-Q., & Lu, C.-C. (2024). Integrating AIGC into product design ideation teaching: An empirical study on self-efficacy and learning outcomes. Learning and Instruction, 92, Article 101929.

Hutchins, E. L., & Klausen, T. (1996). Distributed cognition in an airline cockpit. In Y. Engeström & D. Middleton (Eds.), Cognition and communication at work (pp. 15–34). Cambridge University Press.

Kakabadse, A., & Kakabadse, N. (2005). Outsourcing: Current and future trends. Thunderbird International Business Review, 47(2), 183–204.

Liu, Z., Nersessian, N., & Stasko, J. (2008). Distributed cognition as a theoretical framework for information visualization. IEEE Transactions on Visualization and Computer Graphics, 14(6), 1173–1180.

Martin, C. R., & Newell, R. J. (2004). Factor structure of the Hospital Anxiety and Depression Scale in individuals with facial disfigurement. Psychology, Health & Medicine, 9(3), 327–336.

Mazzone, M., & Elgammal, A. (2019). Art, creativity, and the potential of artificial intelligence. Arts, 8(1), Article 26.

Melnyk, A. (2023). Cognitive process features of higher education students in the modern information environment [In Ukrainian]. Collection of Scientific Papers of Uman State Pedagogical University, 1, 105–117.

Nagam, V. M. (2023). Internet use, users, and cognition: On the cognitive relationships between Internet-based technology and Internet users. BMC Psychology, 11(1), Article 82.

Pariser, E. (2012). Filter bubble: How we are disenfranchised on the Internet [In German]. Carl Hanser Verlag.

Proulx, M. J., Todorov, O. S., Taylor Aiken, A., & de Sousa, A. A. (2016). Where am I? Who am I? The relation between spatial cognition, social cognition, and individual differences in the built environment. Frontiers in Psychology, 7, Article 554.

Purkhardt, S. C. (1993). Transforming social representations: A social psychology of common sense and science. Psychology Press.

Rogers, Y. (1997). A brief introduction to distributed cognition. University of Sussex.

Roumeliotis, K. I., & Tselikas, N. D. (2023). ChatGPT and open-AI models: A preliminary review. Future Internet, 15(6), Article 192.

Schriesheim, C. A., & Eisenbach, R. J. (1995). An exploratory and confirmatory factor-analytic investigation of item wording effects on the obtained factor structures of survey questionnaire measures. Journal of Management, 21(6), 1177–1193.

Shevlin, M. E., & Lewis, C. A. (1999). The revised Social Anxiety Scale: Exploratory and confirmatory factor analysis. The Journal of Social Psychology, 139(2), 250–252.

Song, H., Wei, J., & Jiang, Q. (2023). What influences users’ intention to share works in designer-driven user-generated content communities? A study based on self-determination theory. Systems, 11(11), Article 540.

Tufekci, Z. (2018). Twitter and tear gas: The power and fragility of networked protest. Yale University Press.

Turkle, S. (1995). Life on the screen: Identity in the age of the internet. Simon & Schuster.

Ward, L. (2003). Synchronous neural oscillations and cognitive processes. Trends in Cognitive Sciences, 7(12), 553–559.

Wei, Q. (2024). The effects of the AIGC software on English majors: Professional competence, individual perceptions, and career planning. Journal of Education, Humanities and Social Sciences, 32, 67–73.

Wells, A., & Matthews, G. (1996). Modelling cognition in emotional disorder: The S-REF model. Behaviour Research and Therapy, 34(11–12), 881–888.

Wu, X., & Zhao, T. (2020). Application of natural language processing in social communication: A review and future perspectives [In Chinese]. Computer Science, 47(6), 184–193.

Zhang, Z. (2021). Concept, dimensions, and scale development of gamebullying [In Chinese]. Chinese Journal of Journalism & Communication, 43(10), 69–97.

Adavelli, M. (2024, January 2). 22 exciting WeChat statistics. Techjury.

Ahlstrom-Vij, K. (2016). Is there a problem with cognitive outsourcing? Philosophical Issues, 26(1), 7–24.

Amba, R., & Singh, H. (2023, November 22–24). The influence of the artificial intelligence based CGI on the growth of the film industry [Paper presentation]. The Seventh International Conference on Image Information Processing, Jaypee University of Information Technology, Waknaghat, Solan, India.

Bai, L., Liu, X., & Su, J. (2023). ChatGPT: The cognitive effects on learning and memory. Brain-X, 1(3), Article e30.

Büyüköztürk, Ş. (2007). Data analysis handbook for social sciences [In Turkish]. Pegem A Yayincilik.

Chiu, T. K. F., Ahmad, Z., Ismailov, M., & Sanusi, I. T. (2024). What are artificial intelligence literacy and competency? A comprehensive framework to support them. Computers and Education Open, 6, Article 100171.

Edvardsson, I., & Durst, S. (2020). The knowledge and learning potential of outsourcing. In K. Kumar & J. Davim (Eds.), Methodologies and outcomes of engineering and technological pedagogy (pp. 28–49). IGI Global.

Fellbaum, C. (2013). Obituary: George A. Miller. Computational Linguistics, 39, 1–3.

Glikson, E., & Williams Woolley, A. (2020). Human trust in artificial intelligence: Review of empirical research. Academy of Management Annals, 14(2), 627–660.

Gorelik, N., Chong, J., & Lin, D. J. (2020). Pattern recognition in musculoskeletal imaging using artificial intelligence. Seminars in Musculoskeletal Radiology, 24(1), 38–49.

Huang, K.-L., Liu, Y.-C., Dong, M.-Q., & Lu, C.-C. (2024). Integrating AIGC into product design ideation teaching: An empirical study on self-efficacy and learning outcomes. Learning and Instruction, 92, Article 101929.

Hutchins, E. L., & Klausen, T. (1996). Distributed cognition in an airline cockpit. In Y. Engestrom & D. Middleton (Eds.), Cognition and communication at work (pp. 15–34). Cambridge University Press.

Kakabadse, A., & Kakabadse, N. (2005). Outsourcing: Current and future trends. Thunderbird International Business Review, 47(2), 183–204.

Liu, Z., Nersessian, N., & Stasko, J. (2008). Distributed cognition as a theoretical framework for information visualization. IEEE Transactions on Visualization and Computer Graphics, 14(6), 1173–1180.

Martin, C. R., & Newell, R. J. (2004). Factor structure of the Hospital Anxiety and Depression Scale in individuals with facial disfigurement. Psychology, Health & Medicine, 9(3), 327–336.

Mazzone, M., & Elgammal, A. (2019, February). Art, creativity, and the potential of artificial intelligence. Arts, 8(1), Article 26.

Melnyk, A. (2023). Cognitive process features of higher education students in the modern information environment [In Ukrainian]. Collection of Scientific Papers of Uman State Pedagogical University, 1, 105–117.

Nagam, V. M. (2023). Internet use, users, and cognition: On the cognitive relationships between Internet-based technology and Internet users. BMC Psychology, 11(1), Article 82.

Pariser, E. (2012). Filter bubble: How we are disenfranchised on the Internet [In German]. Carl Hanser Verlag.

Proulx, M. J., Todorov, O. S., Taylor Aiken, A., & de Sousa, A. A. (2016). Where am I? Who am I? The relation between spatial cognition, social cognition, and individual differences in the built environment. Frontiers in Psychology, 7, Article 554.

Purkhardt, S. C. (1993). Transforming social representations: A social psychology of common sense and science. Psychology Press.

Rogers, Y. (1997). A brief introduction to distributed cognition. University of Sussex.

Roumeliotis, K. I., & Tselikas, N. D. (2023). ChatGPT and open-AI models: A preliminary review. Future Internet, 15(6), Article 192.

Schriesheim, C. A., & Eisenbach, R. J. (1995). An exploratory and confirmatory factor-analytic investigation of item wording effects on the obtained factor structures of survey questionnaire measures. Journal of Management, 21(6), 1177–1193.

Shevlin, M. E., & Lewis, C. A. (1999). The revised Social Anxiety Scale: Exploratory and confirmatory factor analysis. The Journal of Social Psychology, 139(2), 250–252.

Song, H., Wei, J., & Jiang, Q. (2023). What influences users’ intention to share works in designer-driven user-generated content communities? A study based on self-determination theory. Systems, 11(11), Article 540.

Tufekci, Z. (2018). Twitter and tear gas: The power and fragility of networked protest. Yale University Press.

Turkle, S. (1995). Life on the screen: Identity in the age of the internet. Simon & Schuster.

Ward, L. (2003). Synchronous neural oscillations and cognitive processes. Trends in Cognitive Sciences, 7(12), 553–559.

Wei, Q. (2024). The effects of the AIGC software on English majors: Professional competence, individual perceptions, and career planning. Journal of Education, Humanities and Social Sciences, 32, 67–73.

Wells, A., & Matthews, G. (1996). Modelling cognition in emotional disorder: The S-REF model. Behaviour Research and Therapy, 34(11–12), 881–888.

Wu, X., & Zhao, T. (2020). Application of natural language processing in social communication: A review and future perspectives [In Chinese]. Computer Science, 47(6), 184–193.

Zhang, Z. (2021). Concept, dimensions, and scale development of gamebullying [In Chinese]. Chinese Journal of Journalism & Communication, 43(10), 69–97.